|

| virtual | ~homographyNet () |

| | Destroy. More...

|

| |

| bool | FindDisplacement (float *imageA, float *imageB, uint32_t width, uint32_t height, float displacement[8]) |

| | Find the displacement from imageA to imageB. More...

|

| |

| bool | FindHomography (float *imageA, float *imageB, uint32_t width, uint32_t height, float H[3][3]) |

| | Find the homography that warps imageA to imageB. More...

|

| |

| bool | FindHomography (float *imageA, float *imageB, uint32_t width, uint32_t height, float H[3][3], float H_inv[3][3]) |

| | Find the homography (and its inverse) that warps imageA to imageB. More...

|

| |

| bool | ComputeHomography (const float displacement[8], float H[3][3]) |

| | Given the displacement from FindDisplacement(), compute the homography. More...

|

| |

| bool | ComputeHomography (const float displacement[8], float H[3][3], float H_inv[3][3]) |

| | Given the displacement from FindDisplacement(), compute the homography and its inverse. More...

|

| |

| virtual | ~tensorNet () |

| | Destroy. More...

|

| |

| bool | LoadNetwork (const char *prototxt, const char *model, const char *mean=NULL, const char *input_blob="data", const char *output_blob="prob", uint32_t maxBatchSize=DEFAULT_MAX_BATCH_SIZE, precisionType precision=TYPE_FASTEST, deviceType device=DEVICE_GPU, bool allowGPUFallback=true, nvinfer1::IInt8Calibrator *calibrator=NULL, cudaStream_t stream=NULL) |

| | Load a new network instance. More...

|

| |

| bool | LoadNetwork (const char *prototxt, const char *model, const char *mean, const char *input_blob, const std::vector< std::string > &output_blobs, uint32_t maxBatchSize=DEFAULT_MAX_BATCH_SIZE, precisionType precision=TYPE_FASTEST, deviceType device=DEVICE_GPU, bool allowGPUFallback=true, nvinfer1::IInt8Calibrator *calibrator=NULL, cudaStream_t stream=NULL) |

| | Load a new network instance with multiple output layers. More...

|

| |

| bool | LoadNetwork (const char *prototxt, const char *model, const char *mean, const char *input_blob, const Dims3 &input_dims, const std::vector< std::string > &output_blobs, uint32_t maxBatchSize=DEFAULT_MAX_BATCH_SIZE, precisionType precision=TYPE_FASTEST, deviceType device=DEVICE_GPU, bool allowGPUFallback=true, nvinfer1::IInt8Calibrator *calibrator=NULL, cudaStream_t stream=NULL) |

| | Load a new network instance (this variant is used for UFF models) More...

|

| |

| void | EnableLayerProfiler () |

| | Manually enable layer profiling times. More...

|

| |

| void | EnableDebug () |

| | Manually enable debug messages and synchronization. More...

|

| |

| bool | AllowGPUFallback () const |

| | Return true if GPU fallback is enabled. More...

|

| |

| deviceType | GetDevice () const |

| | Retrieve the device being used for execution. More...

|

| |

| precisionType | GetPrecision () const |

| | Retrieve the type of precision being used. More...

|

| |

| bool | IsPrecision (precisionType type) const |

| | Check if a particular precision is being used. More...

|

| |

| cudaStream_t | GetStream () const |

| | Retrieve the stream that the device is operating on. More...

|

| |

| cudaStream_t | CreateStream (bool nonBlocking=true) |

| | Create and use a new stream for execution. More...

|

| |

| void | SetStream (cudaStream_t stream) |

| | Set the stream that the device is operating on. More...

|

| |

| const char * | GetPrototxtPath () const |

| | Retrieve the path to the network prototxt file. More...

|

| |

| const char * | GetModelPath () const |

| | Retrieve the path to the network model file. More...

|

| |

| modelType | GetModelType () const |

| | Retrieve the format of the network model. More...

|

| |

| bool | IsModelType (modelType type) const |

| | Return true if the model is of the specified format. More...

|

| |

| float | GetNetworkTime () |

| | Retrieve the network runtime (in milliseconds). More...

|

| |

| float2 | GetProfilerTime (profilerQuery query) |

| | Retrieve the profiler runtime (in milliseconds). More...

|

| |

| float | GetProfilerTime (profilerQuery query, profilerDevice device) |

| | Retrieve the profiler runtime (in milliseconds). More...

|

| |

| void | PrintProfilerTimes () |

| | Print the profiler times (in milliseconds). More...

|

| |

|

| static NetworkType | NetworkTypeFromStr (const char *model_name) |

| | Parse a string to one of the built-in pretrained models. More...

|

| |

| static homographyNet * | Create (NetworkType networkType=WEBCAM_320, uint32_t maxBatchSize=1, precisionType precision=TYPE_FASTEST, deviceType device=DEVICE_GPU, bool allowGPUFallback=true) |

| | Load a new network instance. More...

|

| |

| static homographyNet * | Create (const char *model_path, const char *input=HOMOGRAPHY_NET_DEFAULT_INPUT, const char *output=HOMOGRAPHY_NET_DEFAULT_OUTPUT, uint32_t maxBatchSize=1, precisionType precision=TYPE_FASTEST, deviceType device=DEVICE_GPU, bool allowGPUFallback=true) |

| | Load a custom network instance. More...

|

| |

| static homographyNet * | Create (int argc, char **argv) |

| | Load a new network instance by parsing the command line. More...

|

| |

| static precisionType | FindFastestPrecision (deviceType device=DEVICE_GPU, bool allowInt8=true) |

| | Determine the fastest native precision on a device. More...

|

| |

| static std::vector< precisionType > | DetectNativePrecisions (deviceType device=DEVICE_GPU) |

| | Detect the precisions supported natively on a device. More...

|

| |

| static bool | DetectNativePrecision (const std::vector< precisionType > &nativeTypes, precisionType type) |

| | Detect if a particular precision is supported natively. More...

|

| |

| static bool | DetectNativePrecision (precisionType precision, deviceType device=DEVICE_GPU) |

| | Detect if a particular precision is supported natively. More...

|

| |

|

| | homographyNet () |

| |

| | tensorNet () |

| | Constructor. More...

|

| |

| bool | ProfileModel (const std::string &deployFile, const std::string &modelFile, const char *input, const Dims3 &inputDims, const std::vector< std::string > &outputs, uint32_t maxBatchSize, precisionType precision, deviceType device, bool allowGPUFallback, nvinfer1::IInt8Calibrator *calibrator, std::ostream &modelStream) |

| | Create and output an optimized network model. More...

|

| |

| void | PROFILER_BEGIN (profilerQuery query) |

| | Begin a profiling query, before network is run. More...

|

| |

| void | PROFILER_END (profilerQuery query) |

| | End a profiling query, after the network is run. More...

|

| |

| bool | PROFILER_QUERY (profilerQuery query) |

| | Query the CUDA part of a profiler query. More...

|

| |

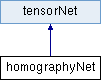

Homography estimation networks with TensorRT support.
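ComputeHomography() turns the eight values produced by FindDisplacement() into a 3x3 matrix. The listing does not say how `displacement[8]` is laid out, so the sketch below assumes the common four-point parameterization: one (dx, dy) offset per image corner, ordered top-left, top-right, bottom-right, bottom-left. Under that assumption the homography follows from solving an 8x8 linear system. The names `Mat3`, `solve8`, and `homographyFromCorners` are hypothetical helpers for illustration and are not part of homographyNet.

```cpp
#include <array>
#include <cmath>
#include <utility>

using Mat3 = std::array<std::array<double, 3>, 3>;

// Solve an 8x8 linear system given as an augmented matrix [A | b],
// using Gauss-Jordan elimination with partial pivoting.
// On success the solution is left in column 8. Returns false if singular.
static bool solve8(double A[8][9])
{
    const int n = 8;
    for (int col = 0; col < n; ++col) {
        // Pick the row at or below `col` with the largest entry in this column.
        int pivot = col;
        for (int r = col + 1; r < n; ++r)
            if (std::fabs(A[r][col]) > std::fabs(A[pivot][col])) pivot = r;
        if (std::fabs(A[pivot][col]) < 1e-12) return false;
        if (pivot != col)
            for (int c = 0; c <= n; ++c) std::swap(A[col][c], A[pivot][c]);
        // Eliminate this column from every other row.
        for (int r = 0; r < n; ++r) {
            if (r == col) continue;
            const double f = A[r][col] / A[col][col];
            for (int c = col; c <= n; ++c) A[r][c] -= f * A[col][c];
        }
    }
    for (int r = 0; r < n; ++r) A[r][n] /= A[r][r];
    return true;
}

// ASSUMPTION: displacement holds (dx, dy) for the four image corners in the
// order top-left, top-right, bottom-right, bottom-left. This layout is not
// stated in the class listing.
bool homographyFromCorners(const double displacement[8],
                           double width, double height, Mat3& H)
{
    const double src[4][2] = { {0, 0}, {width, 0}, {width, height}, {0, height} };
    double A[8][9];
    for (int i = 0; i < 4; ++i) {
        const double x = src[i][0], y = src[i][1];
        const double u = x + displacement[2 * i];      // displaced corner x
        const double v = y + displacement[2 * i + 1];  // displaced corner y
        // From u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v.
        const double r0[9] = { x, y, 1, 0, 0, 0, -u * x, -u * y, u };
        const double r1[9] = { 0, 0, 0, x, y, 1, -v * x, -v * y, v };
        for (int c = 0; c < 9; ++c) {
            A[2 * i][c]     = r0[c];
            A[2 * i + 1][c] = r1[c];
        }
    }
    if (!solve8(A)) return false;
    for (int i = 0; i < 8; ++i) H[i / 3][i % 3] = A[i][8];
    H[2][2] = 1.0;  // fix the overall scale of the homography
    return true;
}
```

With every corner shifted by the same offset, the result should reduce to a pure translation matrix, which makes a convenient sanity check before feeding in real network output.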

Public Member Functions inherited from tensorNet

Static Public Member Functions inherited from tensorNet

Protected Member Functions inherited from tensorNet

Protected Attributes inherited from tensorNet