|

Jetson Inference

DNN Vision Library

|

|

Jetson Inference

DNN Vision Library

|

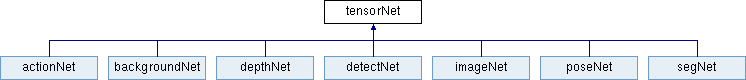

Abstract class for loading a tensor network with TensorRT. More...

#include <tensorNet.h>

Classes | |

| struct | layerInfo |

| class | Logger |

| Logger class for GIE info/warning/errors. More... | |

| class | Profiler |

| Profiler interface for measuring layer timings. More... | |

Public Member Functions | |

| virtual | ~tensorNet () |

| Destory. More... | |

| bool | LoadNetwork (const char *prototxt, const char *model, const char *mean=NULL, const char *input_blob="data", const char *output_blob="prob", uint32_t maxBatchSize=DEFAULT_MAX_BATCH_SIZE, precisionType precision=TYPE_FASTEST, deviceType device=DEVICE_GPU, bool allowGPUFallback=true, nvinfer1::IInt8Calibrator *calibrator=NULL, cudaStream_t stream=NULL) |

| Load a new network instance. More... | |

| bool | LoadNetwork (const char *prototxt, const char *model, const char *mean, const char *input_blob, const std::vector< std::string > &output_blobs, uint32_t maxBatchSize=DEFAULT_MAX_BATCH_SIZE, precisionType precision=TYPE_FASTEST, deviceType device=DEVICE_GPU, bool allowGPUFallback=true, nvinfer1::IInt8Calibrator *calibrator=NULL, cudaStream_t stream=NULL) |

| Load a new network instance with multiple output layers. More... | |

| bool | LoadNetwork (const char *prototxt, const char *model, const char *mean, const std::vector< std::string > &input_blobs, const std::vector< std::string > &output_blobs, uint32_t maxBatchSize=DEFAULT_MAX_BATCH_SIZE, precisionType precision=TYPE_FASTEST, deviceType device=DEVICE_GPU, bool allowGPUFallback=true, nvinfer1::IInt8Calibrator *calibrator=NULL, cudaStream_t stream=NULL) |

| Load a new network instance with multiple input layers. More... | |

| bool | LoadNetwork (const char *prototxt, const char *model, const char *mean, const char *input_blob, const Dims3 &input_dims, const std::vector< std::string > &output_blobs, uint32_t maxBatchSize=DEFAULT_MAX_BATCH_SIZE, precisionType precision=TYPE_FASTEST, deviceType device=DEVICE_GPU, bool allowGPUFallback=true, nvinfer1::IInt8Calibrator *calibrator=NULL, cudaStream_t stream=NULL) |

| Load a new network instance (this variant is used for UFF models) More... | |

| bool | LoadNetwork (const char *prototxt, const char *model, const char *mean, const std::vector< std::string > &input_blobs, const std::vector< Dims3 > &input_dims, const std::vector< std::string > &output_blobs, uint32_t maxBatchSize=DEFAULT_MAX_BATCH_SIZE, precisionType precision=TYPE_FASTEST, deviceType device=DEVICE_GPU, bool allowGPUFallback=true, nvinfer1::IInt8Calibrator *calibrator=NULL, cudaStream_t stream=NULL) |

| Load a new network instance with multiple input layers (used for UFF models) More... | |

| bool | LoadEngine (const char *engine_filename, const std::vector< std::string > &input_blobs, const std::vector< std::string > &output_blobs, nvinfer1::IPluginFactory *pluginFactory=NULL, deviceType device=DEVICE_GPU, cudaStream_t stream=NULL) |

| Load a network instance from a serialized engine plan file. More... | |

| bool | LoadEngine (char *engine_stream, size_t engine_size, const std::vector< std::string > &input_blobs, const std::vector< std::string > &output_blobs, nvinfer1::IPluginFactory *pluginFactory=NULL, deviceType device=DEVICE_GPU, cudaStream_t stream=NULL) |

| Load a network instance from a serialized engine plan file. More... | |

| bool | LoadEngine (nvinfer1::ICudaEngine *engine, const std::vector< std::string > &input_blobs, const std::vector< std::string > &output_blobs, deviceType device=DEVICE_GPU, cudaStream_t stream=NULL) |

| Load network resources from an existing TensorRT engine instance. More... | |

| bool | LoadEngine (const char *filename, char **stream, size_t *size) |

| Load a serialized engine plan file into memory. More... | |

| void | EnableLayerProfiler () |

| Manually enable layer profiling times. More... | |

| void | EnableDebug () |

| Manually enable debug messages and synchronization. More... | |

| bool | AllowGPUFallback () const |

| Return true if GPU fallback is enabled. More... | |

| deviceType | GetDevice () const |

| Retrieve the device being used for execution. More... | |

| precisionType | GetPrecision () const |

| Retrieve the type of precision being used. More... | |

| bool | IsPrecision (precisionType type) const |

| Check if a particular precision is being used. More... | |

| cudaStream_t | GetStream () const |

| Retrieve the stream that the device is operating on. More... | |

| cudaStream_t | CreateStream (bool nonBlocking=true) |

| Create and use a new stream for execution. More... | |

| void | SetStream (cudaStream_t stream) |

| Set the stream that the device is operating on. More... | |

| const char * | GetPrototxtPath () const |

| Retrieve the path to the network prototxt file. More... | |

| const char * | GetModelPath () const |

| Retrieve the full path to model file, including the filename. More... | |

| const char * | GetModelFilename () const |

| Retrieve the filename of the file, excluding the directory. More... | |

| modelType | GetModelType () const |

| Retrieve the format of the network model. More... | |

| bool | IsModelType (modelType type) const |

| Return true if the model is of the specified format. More... | |

| uint32_t | GetInputLayers () const |

| Retrieve the number of input layers to the network. More... | |

| uint32_t | GetOutputLayers () const |

| Retrieve the number of output layers to the network. More... | |

| Dims3 | GetInputDims (uint32_t layer=0) const |

| Retrieve the dimensions of network input layer. More... | |

| uint32_t | GetInputWidth (uint32_t layer=0) const |

| Retrieve the width of network input layer. More... | |

| uint32_t | GetInputHeight (uint32_t layer=0) const |

| Retrieve the height of network input layer. More... | |

| uint32_t | GetInputSize (uint32_t layer=0) const |

| Retrieve the size (in bytes) of network input layer. More... | |

| float * | GetInputPtr (uint32_t layer=0) const |

| Get the CUDA pointer to the input layer's memory. More... | |

| Dims3 | GetOutputDims (uint32_t layer=0) const |

| Retrieve the dimensions of network output layer. More... | |

| uint32_t | GetOutputWidth (uint32_t layer=0) const |

| Retrieve the width of network output layer. More... | |

| uint32_t | GetOutputHeight (uint32_t layer=0) const |

| Retrieve the height of network output layer. More... | |

| uint32_t | GetOutputSize (uint32_t layer=0) const |

| Retrieve the size (in bytes) of network output layer. More... | |

| float * | GetOutputPtr (uint32_t layer=0) const |

| Get the CUDA pointer to the output memory. More... | |

| float | GetNetworkFPS () |

| Retrieve the network frames per second (FPS). More... | |

| float | GetNetworkTime () |

| Retrieve the network runtime (in milliseconds). More... | |

| const char * | GetNetworkName () const |

| Retrieve the network name (it's filename). More... | |

| float2 | GetProfilerTime (profilerQuery query) |

| Retrieve the profiler runtime (in milliseconds). More... | |

| float | GetProfilerTime (profilerQuery query, profilerDevice device) |

| Retrieve the profiler runtime (in milliseconds). More... | |

| void | PrintProfilerTimes () |

| Print the profiler times (in millseconds). More... | |

Static Public Member Functions | |

| static bool | LoadClassLabels (const char *filename, std::vector< std::string > &descriptions, int expectedClasses=-1) |

| Load class descriptions from a label file. More... | |

| static bool | LoadClassLabels (const char *filename, std::vector< std::string > &descriptions, std::vector< std::string > &synsets, int expectedClasses=-1) |

| Load class descriptions and synset strings from a label file. More... | |

| static bool | LoadClassColors (const char *filename, float4 *colors, int expectedClasses, float defaultAlpha=255.0f) |

| Load class colors from a text file. More... | |

| static bool | LoadClassColors (const char *filename, float4 **colors, int expectedClasses, float defaultAlpha=255.0f) |

| Load class colors from a text file. More... | |

| static float4 | GenerateColor (uint32_t classID, float alpha=255.0f) |

| Procedurally generate a color for a given class index with the specified alpha value. More... | |

| static precisionType | SelectPrecision (precisionType precision, deviceType device=DEVICE_GPU, bool allowInt8=true) |

| Resolve a desired precision to a specific one that's available. More... | |

| static precisionType | FindFastestPrecision (deviceType device=DEVICE_GPU, bool allowInt8=true) |

| Determine the fastest native precision on a device. More... | |

| static std::vector< precisionType > | DetectNativePrecisions (deviceType device=DEVICE_GPU) |

| Detect the precisions supported natively on a device. More... | |

| static bool | DetectNativePrecision (const std::vector< precisionType > &nativeTypes, precisionType type) |

| Detect if a particular precision is supported natively. More... | |

| static bool | DetectNativePrecision (precisionType precision, deviceType device=DEVICE_GPU) |

| Detect if a particular precision is supported natively. More... | |

Protected Member Functions | |

| tensorNet () | |

| Constructor. More... | |

| bool | ProcessNetwork (bool sync=true) |

| Execute processing of the network. More... | |

| bool | ProfileModel (const std::string &deployFile, const std::string &modelFile, const std::vector< std::string > &inputs, const std::vector< Dims3 > &inputDims, const std::vector< std::string > &outputs, uint32_t maxBatchSize, precisionType precision, deviceType device, bool allowGPUFallback, nvinfer1::IInt8Calibrator *calibrator, char **engineStream, size_t *engineSize) |

| Create and output an optimized network model. More... | |

| bool | ConfigureBuilder (nvinfer1::IBuilder *builder, uint32_t maxBatchSize, uint32_t workspaceSize, precisionType precision, deviceType device, bool allowGPUFallback, nvinfer1::IInt8Calibrator *calibrator) |

| Configure builder options. More... | |

| bool | ValidateEngine (const char *model_path, const char *cache_path, const char *checksum_path) |

| Validate that the model already has a built TensorRT engine that exists and doesn't need updating. More... | |

| void | PROFILER_BEGIN (profilerQuery query) |

| Begin a profiling query, before network is run. More... | |

| void | PROFILER_END (profilerQuery query) |

| End a profiling query, after the network is run. More... | |

| bool | PROFILER_QUERY (profilerQuery query) |

| Query the CUDA part of a profiler query. More... | |

Protected Attributes | |

| tensorNet::Logger | gLogger |

| tensorNet::Profiler | gProfiler |

| std::string | mPrototxtPath |

| std::string | mModelPath |

| std::string | mModelFile |

| std::string | mMeanPath |

| std::string | mCacheEnginePath |

| std::string | mCacheCalibrationPath |

| std::string | mChecksumPath |

| deviceType | mDevice |

| precisionType | mPrecision |

| modelType | mModelType |

| cudaStream_t | mStream |

| cudaEvent_t | mEventsGPU [PROFILER_TOTAL *2] |

| timespec | mEventsCPU [PROFILER_TOTAL *2] |

| nvinfer1::IRuntime * | mInfer |

| nvinfer1::ICudaEngine * | mEngine |

| nvinfer1::IExecutionContext * | mContext |

| float2 | mProfilerTimes [PROFILER_TOTAL+1] |

| uint32_t | mProfilerQueriesUsed |

| uint32_t | mProfilerQueriesDone |

| uint32_t | mWorkspaceSize |

| uint32_t | mMaxBatchSize |

| bool | mEnableProfiler |

| bool | mEnableDebug |

| bool | mAllowGPUFallback |

| void ** | mBindings |

| std::vector< layerInfo > | mInputs |

| std::vector< layerInfo > | mOutputs |

Abstract class for loading a tensor network with TensorRT.

For example implementations,

|

virtual |

Destory.

|

protected |

Constructor.

|

inline |

Return true if GPU fallback is enabled.

|

protected |

Configure builder options.

| cudaStream_t tensorNet::CreateStream | ( | bool | nonBlocking = true | ) |

Create and use a new stream for execution.

|

static |

Detect if a particular precision is supported natively.

|

static |

Detect if a particular precision is supported natively.

|

static |

Detect the precisions supported natively on a device.

| void tensorNet::EnableDebug | ( | ) |

Manually enable debug messages and synchronization.

| void tensorNet::EnableLayerProfiler | ( | ) |

Manually enable layer profiling times.

|

static |

Determine the fastest native precision on a device.

|

static |

Procedurally generate a color for a given class index with the specified alpha value.

This function can be used to generate a range of colors when a colors.txt file isn't available.

|

inline |

Retrieve the device being used for execution.

|

inline |

Retrieve the dimensions of network input layer.

|

inline |

Retrieve the height of network input layer.

|

inline |

Retrieve the number of input layers to the network.

|

inline |

Get the CUDA pointer to the input layer's memory.

|

inline |

Retrieve the size (in bytes) of network input layer.

|

inline |

Retrieve the width of network input layer.

|

inline |

Retrieve the filename of the file, excluding the directory.

|

inline |

Retrieve the full path to model file, including the filename.

|

inline |

Retrieve the format of the network model.

|

inline |

Retrieve the network frames per second (FPS).

|

inline |

Retrieve the network name (it's filename).

|

inline |

Retrieve the network runtime (in milliseconds).

|

inline |

Retrieve the dimensions of network output layer.

|

inline |

Retrieve the height of network output layer.

|

inline |

Retrieve the number of output layers to the network.

|

inline |

Get the CUDA pointer to the output memory.

|

inline |

Retrieve the size (in bytes) of network output layer.

|

inline |

Retrieve the width of network output layer.

|

inline |

Retrieve the type of precision being used.

|

inline |

Retrieve the profiler runtime (in milliseconds).

|

inline |

Retrieve the profiler runtime (in milliseconds).

|

inline |

Retrieve the path to the network prototxt file.

|

inline |

Retrieve the stream that the device is operating on.

|

inline |

Return true if the model is of the specified format.

|

inline |

Check if a particular precision is being used.

|

static |

Load class colors from a text file.

If the number of expected colors aren't parsed, they will be generated. The float4 color array will automatically be allocated in shared CPU/GPU memory by cudaAllocMapped(). If a line in the text file only has RGB, then the defaultAlpha value will be used for the alpha channel.

|

static |

Load class colors from a text file.

If the number of expected colors aren't parsed, they will be generated. The float4 color array should be expectedClasses long, and would typically be in shared CPU/GPU memory. If a line in the text file only has RGB, then the defaultAlpha value will be used for the alpha channel.

|

static |

Load class descriptions from a label file.

Each line of the text file should include one class label (and optionally a synset). If the number of expected labels aren't parsed, they will be automatically generated.

|

static |

Load class descriptions and synset strings from a label file.

Each line of the text file should include one class label (and optionally a synset). If the number of expected labels aren't parsed, they will be automatically generated.

| bool tensorNet::LoadEngine | ( | char * | engine_stream, |

| size_t | engine_size, | ||

| const std::vector< std::string > & | input_blobs, | ||

| const std::vector< std::string > & | output_blobs, | ||

| nvinfer1::IPluginFactory * | pluginFactory = NULL, |

||

| deviceType | device = DEVICE_GPU, |

||

| cudaStream_t | stream = NULL |

||

| ) |

Load a network instance from a serialized engine plan file.

| engine_stream | Memory containing the serialized engine plan file. |

| engine_size | Size of the serialized engine stream (in bytes). |

| input_blobs | List of names of the inputs blob data to the network. |

| output_blobs | List of names of the output blobs from the network. |

| bool tensorNet::LoadEngine | ( | const char * | engine_filename, |

| const std::vector< std::string > & | input_blobs, | ||

| const std::vector< std::string > & | output_blobs, | ||

| nvinfer1::IPluginFactory * | pluginFactory = NULL, |

||

| deviceType | device = DEVICE_GPU, |

||

| cudaStream_t | stream = NULL |

||

| ) |

Load a network instance from a serialized engine plan file.

| engine_filename | path to the serialized engine plan file. |

| input_blobs | List of names of the inputs blob data to the network. |

| output_blobs | List of names of the output blobs from the network. |

| bool tensorNet::LoadEngine | ( | const char * | filename, |

| char ** | stream, | ||

| size_t * | size | ||

| ) |

Load a serialized engine plan file into memory.

| bool tensorNet::LoadEngine | ( | nvinfer1::ICudaEngine * | engine, |

| const std::vector< std::string > & | input_blobs, | ||

| const std::vector< std::string > & | output_blobs, | ||

| deviceType | device = DEVICE_GPU, |

||

| cudaStream_t | stream = NULL |

||

| ) |

Load network resources from an existing TensorRT engine instance.

| engine_stream | Memory containing the serialized engine plan file. |

| engine_size | Size of the serialized engine stream (in bytes). |

| input_blobs | List of names of the inputs blob data to the network. |

| output_blobs | List of names of the output blobs from the network. |

| bool tensorNet::LoadNetwork | ( | const char * | prototxt, |

| const char * | model, | ||

| const char * | mean, | ||

| const char * | input_blob, | ||

| const Dims3 & | input_dims, | ||

| const std::vector< std::string > & | output_blobs, | ||

| uint32_t | maxBatchSize = DEFAULT_MAX_BATCH_SIZE, |

||

| precisionType | precision = TYPE_FASTEST, |

||

| deviceType | device = DEVICE_GPU, |

||

| bool | allowGPUFallback = true, |

||

| nvinfer1::IInt8Calibrator * | calibrator = NULL, |

||

| cudaStream_t | stream = NULL |

||

| ) |

Load a new network instance (this variant is used for UFF models)

| prototxt | File path to the deployable network prototxt |

| model | File path to the caffemodel |

| mean | File path to the mean value binary proto (NULL if none) |

| input_blob | The name of the input blob data to the network. |

| input_dims | The dimensions of the input blob (used for UFF). |

| output_blobs | List of names of the output blobs from the network. |

| maxBatchSize | The maximum batch size that the network will be optimized for. |

| bool tensorNet::LoadNetwork | ( | const char * | prototxt, |

| const char * | model, | ||

| const char * | mean, | ||

| const char * | input_blob, | ||

| const std::vector< std::string > & | output_blobs, | ||

| uint32_t | maxBatchSize = DEFAULT_MAX_BATCH_SIZE, |

||

| precisionType | precision = TYPE_FASTEST, |

||

| deviceType | device = DEVICE_GPU, |

||

| bool | allowGPUFallback = true, |

||

| nvinfer1::IInt8Calibrator * | calibrator = NULL, |

||

| cudaStream_t | stream = NULL |

||

| ) |

Load a new network instance with multiple output layers.

| prototxt | File path to the deployable network prototxt |

| model | File path to the caffemodel |

| mean | File path to the mean value binary proto (NULL if none) |

| input_blob | The name of the input blob data to the network. |

| output_blobs | List of names of the output blobs from the network. |

| maxBatchSize | The maximum batch size that the network will be optimized for. |

| bool tensorNet::LoadNetwork | ( | const char * | prototxt, |

| const char * | model, | ||

| const char * | mean, | ||

| const std::vector< std::string > & | input_blobs, | ||

| const std::vector< Dims3 > & | input_dims, | ||

| const std::vector< std::string > & | output_blobs, | ||

| uint32_t | maxBatchSize = DEFAULT_MAX_BATCH_SIZE, |

||

| precisionType | precision = TYPE_FASTEST, |

||

| deviceType | device = DEVICE_GPU, |

||

| bool | allowGPUFallback = true, |

||

| nvinfer1::IInt8Calibrator * | calibrator = NULL, |

||

| cudaStream_t | stream = NULL |

||

| ) |

Load a new network instance with multiple input layers (used for UFF models)

| prototxt | File path to the deployable network prototxt |

| model | File path to the caffemodel |

| mean | File path to the mean value binary proto (NULL if none) |

| input_blobs | List of names of the inputs blob data to the network. |

| input_dims | List of the dimensions of the input blobs (used for UFF). |

| output_blobs | List of names of the output blobs from the network. |

| maxBatchSize | The maximum batch size that the network will be optimized for. |

| bool tensorNet::LoadNetwork | ( | const char * | prototxt, |

| const char * | model, | ||

| const char * | mean, | ||

| const std::vector< std::string > & | input_blobs, | ||

| const std::vector< std::string > & | output_blobs, | ||

| uint32_t | maxBatchSize = DEFAULT_MAX_BATCH_SIZE, |

||

| precisionType | precision = TYPE_FASTEST, |

||

| deviceType | device = DEVICE_GPU, |

||

| bool | allowGPUFallback = true, |

||

| nvinfer1::IInt8Calibrator * | calibrator = NULL, |

||

| cudaStream_t | stream = NULL |

||

| ) |

Load a new network instance with multiple input layers.

| prototxt | File path to the deployable network prototxt |

| model | File path to the caffemodel |

| mean | File path to the mean value binary proto (NULL if none) |

| input_blobs | List of names of the inputs blob data to the network. |

| output_blobs | List of names of the output blobs from the network. |

| maxBatchSize | The maximum batch size that the network will be optimized for. |

| bool tensorNet::LoadNetwork | ( | const char * | prototxt, |

| const char * | model, | ||

| const char * | mean = NULL, |

||

| const char * | input_blob = "data", |

||

| const char * | output_blob = "prob", |

||

| uint32_t | maxBatchSize = DEFAULT_MAX_BATCH_SIZE, |

||

| precisionType | precision = TYPE_FASTEST, |

||

| deviceType | device = DEVICE_GPU, |

||

| bool | allowGPUFallback = true, |

||

| nvinfer1::IInt8Calibrator * | calibrator = NULL, |

||

| cudaStream_t | stream = NULL |

||

| ) |

Load a new network instance.

| prototxt | File path to the deployable network prototxt |

| model | File path to the caffemodel |

| mean | File path to the mean value binary proto (NULL if none) |

| input_blob | The name of the input blob data to the network. |

| output_blob | The name of the output blob data from the network. |

| maxBatchSize | The maximum batch size that the network will be optimized for. |

|

inline |

Print the profiler times (in millseconds).

|

protected |

Execute processing of the network.

| sync | if true (default), the device will be synchronized after processing and the thread/function will block until processing is complete. if false, the function will return immediately after the processing has been enqueued to the CUDA stream indicated by GetStream(). |

|

protected |

Create and output an optimized network model.

| deployFile | name for network prototxt |

| modelFile | name for model |

| outputs | network outputs |

| maxBatchSize | maximum batch size |

| modelStream | output model stream |

|

inlineprotected |

Begin a profiling query, before network is run.

|

inlineprotected |

End a profiling query, after the network is run.

|

inlineprotected |

Query the CUDA part of a profiler query.

|

static |

Resolve a desired precision to a specific one that's available.

| void tensorNet::SetStream | ( | cudaStream_t | stream | ) |

Set the stream that the device is operating on.

|

protected |

Validate that the model already has a built TensorRT engine that exists and doesn't need updating.

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |

|

protected |