Bio::EnsEMBL::Hive::Process Class Reference

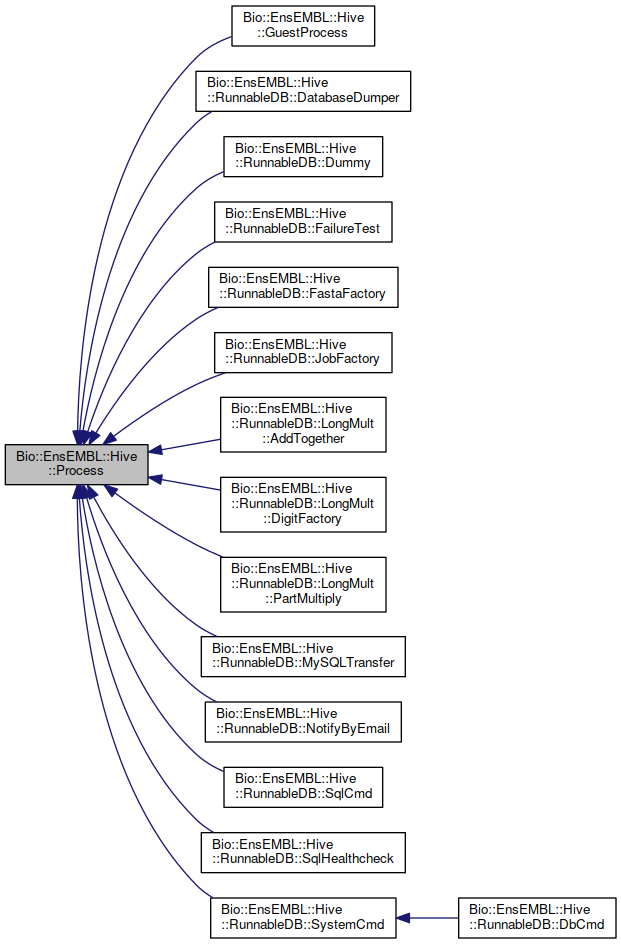

Inheritance diagram for Bio::EnsEMBL::Hive::Process:

Inheritance diagram for Bio::EnsEMBL::Hive::Process:Public Member Functions | |

| public | new () |

| public | life_cycle () |

| public | say_with_header () |

| public | enter_status () |

| public | warning () |

| public | param_defaults () |

| public | fetch_input () |

| public | run () |

| public | write_output () |

| public Bio::EnsEMBL::Hive::Worker | worker () |

| public Boolean | execute_writes () |

| public Bio::EnsEMBL::Hive::DBSQL::DBAdaptor | db () |

| public Bio::EnsEMBL::Hive::DBSQL::DBConnection | dbc () |

| public Bio::EnsEMBL::Hive::DBSQL::DBConnection | data_dbc () |

| public Bio::EnsEMBL::Hive::AnalysisJob | input_job () |

| public | input_id () |

| public | param () |

| public | param_required () |

| public | param_is_defined () |

| public | param_substitute () |

| public | dataflow_output_id () |

| public | throw () |

| public | complete_early () |

| public Int | debug () |

| public | worker_temp_directory () |

| public | worker_temp_directory_name () |

| public | cleanup_worker_temp_directory () |

Detailed Description

Description

Abstract superclass. Each Process makes up the individual building blocks

of the system. Instances of these processes are created in a hive workflow

graph of Analysis entries that are linked together with dataflow and

AnalysisCtrl rules.

Instances of these Processes are created by the system as work is done.

The newly created Process will have preset $self->db, $self->dbc, $self->input_id

and several other variables.

From this input and configuration data, each Process can then proceed to

do something. The flow of execution within a Process is:

pre_cleanup() if($retry_count>0); # clean up databases/filesystem before subsequent attempts

fetch_input(); # fetch the data from databases/filesystems

run(); # perform the main computation

write_output(); # record the results in databases/filesystems

post_cleanup(); # destroy all non-trivial data structures after the job is done

The developer can implement their own versions of

pre_cleanup, fetch_input, run, write_output, and post_cleanup to do what they need.

The entire system is based around the concept of a workflow graph which

can split and loop back on itself. This is accomplished by dataflow

rules (similar to Unix pipes) that connect one Process (or analysis) to others.

Where a Unix command line program can send output on STDOUT STDERR pipes,

a hive Process has access to unlimited pipes referenced by numerical

branch_codes. This is accomplished within the Process via

$self->dataflow_output_id(...);

The design philosophy is that each Process does its work and creates output,

but it doesn't worry about where the input came from, or where its output

goes. If the system has dataflow pipes connected, then the output jobs

have purpose, if not - the output work is thrown away. The workflow graph

'controls' the behaviour of the system, not the processes. The processes just

need to do their job. The design of the workflow graph is based on the knowledge

of what each Process does so that the graph can be correctly constructed.

The workflow graph can be constructed a priori or can be constructed and

modified by intelligent Processes as the system runs.

The Hive is based on AI concepts and modeled on the social structure and

behaviour of a honey bee hive. So where a worker honey bee's purpose is

(go find pollen, bring back to hive, drop off pollen, repeat), an ensembl-hive

worker's purpose is (find a job, create a Process for that job, run it,

drop off output job(s), repeat). While most workflow systems are based

on 'smart' central controllers and external control of 'dumb' processes,

the Hive is based on 'dumb' workflow graphs and job kiosk, and 'smart' workers

(autonomous agents) who are self configuring and figure out for themselves what

needs to be done, and then do it. The workers are based around a set of

emergent behaviour rules which allow a predictible system behaviour to emerge

from what otherwise might appear at first glance to be a chaotic system. There

is an inherent asynchronous disconnect between one worker and the next.

Work (or jobs) are simply 'posted' on a blackboard or kiosk within the hive

database where other workers can find them.

The emergent behaviour rules of a worker are:

1) If a job is posted, someone needs to do it.

2) Don't grab something that someone else is working on

3) Don't grab more than you can handle

4) If you grab a job, it needs to be finished correctly

5) Keep busy doing work

6) If you fail, do the best you can to report back

For further reading on the AI principles employed in this design see:

http://en.wikipedia.org/wiki/Autonomous_Agent

http://en.wikipedia.org/wiki/Emergence

Member Function Documentation

◆ cleanup_worker_temp_directory()

| public Bio::EnsEMBL::Hive::Process::cleanup_worker_temp_directory | ( | ) |

Title : cleanup_worker_temp_directory

Function: Cleans up the directory on the local /tmp disk that is used for the

worker. It can be used to remove files left there by previous jobs.

Usage : $self->cleanup_worker_temp_directory; Code:

Code:

click to view

◆ complete_early()

| public Bio::EnsEMBL::Hive::Process::complete_early | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ data_dbc()

| public Bio::EnsEMBL::Hive::DBSQL::DBConnection Bio::EnsEMBL::Hive::Process::data_dbc | ( | ) |

Title : data_dbc

Usage : my $data_dbc = $self->data_dbc;

Function: returns a Bio::EnsEMBL::Hive::DBSQL::DBConnection object (the "current" one by default, but can be set up otherwise)

Returns : Bio::EnsEMBL::Hive::DBSQL::DBConnection Code:

Code:

click to view

◆ dataflow_output_id()

| public Bio::EnsEMBL::Hive::Process::dataflow_output_id | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ db()

| public Bio::EnsEMBL::Hive::DBSQL::DBAdaptor Bio::EnsEMBL::Hive::Process::db | ( | ) |

Title : db

Usage : my $hiveDBA = $self->db;

Function: returns DBAdaptor to Hive database

Returns : Bio::EnsEMBL::Hive::DBSQL::DBAdaptor Code:

Code:

click to view

◆ dbc()

| public Bio::EnsEMBL::Hive::DBSQL::DBConnection Bio::EnsEMBL::Hive::Process::dbc | ( | ) |

Title : dbc

Usage : my $hiveDBConnection = $self->dbc;

Function: returns DBConnection to Hive database

Returns : Bio::EnsEMBL::Hive::DBSQL::DBConnection Code:

Code:

click to view

◆ debug()

| public Int Bio::EnsEMBL::Hive::Process::debug | ( | ) |

Title : debug

Function: Gets/sets flag for debug level. Set through Worker/runWorker.pl

Subclasses should treat as a read_only variable.

Returns : integer Code:

Code:

click to view

◆ enter_status()

| public Bio::EnsEMBL::Hive::Process::enter_status | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ execute_writes()

| public Boolean Bio::EnsEMBL::Hive::Process::execute_writes | ( | ) |

Title : execute_writes

Usage : $self->execute_writes( 1 );

Function: getter/setter for whether we want the 'write_output' method to be run

Returns : boolean Code:

Code:

click to view

◆ fetch_input()

| public Bio::EnsEMBL::Hive::Process::fetch_input | ( | ) |

Title : fetch_input

Function: sublcass can implement functions related to data fetching.

Typical acivities would be to parse $self->input_id .

Subclasses may also want to fetch data from databases

or from files within this function. Code:

Code:

click to view

◆ input_id()

| public Bio::EnsEMBL::Hive::Process::input_id | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ input_job()

| public Bio::EnsEMBL::Hive::AnalysisJob Bio::EnsEMBL::Hive::Process::input_job | ( | ) |

Title : input_job

Function: Returns the AnalysisJob to be run by this process

Subclasses should treat this as a read_only object.

Returns : Bio::EnsEMBL::Hive::AnalysisJob object Code:

Code:

click to view

◆ life_cycle()

| public Bio::EnsEMBL::Hive::Process::life_cycle | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ new()

| public Bio::EnsEMBL::Hive::Process::new | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ param()

| public Bio::EnsEMBL::Hive::Process::param | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ param_defaults()

| public Bio::EnsEMBL::Hive::Process::param_defaults | ( | ) |

Title : param_defaults

Function: sublcass can define defaults for all params used by the RunnableDB/Process Code:

Code:

click to view

◆ param_is_defined()

| public Bio::EnsEMBL::Hive::Process::param_is_defined | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ param_required()

| public Bio::EnsEMBL::Hive::Process::param_required | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ param_substitute()

| public Bio::EnsEMBL::Hive::Process::param_substitute | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ run()

| public Bio::EnsEMBL::Hive::Process::run | ( | ) |

Title : run

Function: sublcass can implement functions related to process execution.

Typical activities include running external programs or running

algorithms by calling perl methods. Process may also choose to

parse results into memory if an external program was used. Code:

Code:

click to view

◆ say_with_header()

| public Bio::EnsEMBL::Hive::Process::say_with_header | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ throw()

| public Bio::EnsEMBL::Hive::Process::throw | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ warning()

| public Bio::EnsEMBL::Hive::Process::warning | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ worker()

| public Bio::EnsEMBL::Hive::Worker Bio::EnsEMBL::Hive::Process::worker | ( | ) |

Title : worker

Usage : my $worker = $self->worker;

Function: returns the Worker object this Process is run by

Returns : Bio::EnsEMBL::Hive::Worker Code:

Code:

click to view

◆ worker_temp_directory()

| public Bio::EnsEMBL::Hive::Process::worker_temp_directory | ( | ) |

Title : worker_temp_directory

Function: Returns a path to a directory on the local /tmp disk

which the subclass can use as temporary file space.

This directory is made the first time the function is called.

It persists for as long as the worker is alive. This allows

multiple jobs run by the worker to potentially share temp data.

For example the worker (which is a single Analysis) might need

to dump a datafile file which is needed by all jobs run through

this analysis. The process can first check the worker_temp_directory

for the file and dump it if it is missing. This way the first job

run by the worker will do the dump, but subsequent jobs can reuse the

file.

Usage : $tmp_dir = $self->worker_temp_directory;

Returns : <string> path to a local (/tmp) directory Code:

Code:

click to view

◆ worker_temp_directory_name()

| public Bio::EnsEMBL::Hive::Process::worker_temp_directory_name | ( | ) |

Undocumented method

Code:

Code:

click to view

◆ write_output()

| public Bio::EnsEMBL::Hive::Process::write_output | ( | ) |

Title : write_output

Function: sublcass can implement functions related to storing results.

Typical activities including writing results into database tables

or into files on a shared filesystem. Code:

Code:

click to view

The documentation for this class was generated from the following file:

- modules/Bio/EnsEMBL/Hive/Process.pm