|

|

|

|

|

Pytorch-nmt

|

Vanilla MLE and RAML implementation |

|

|

QRNN.pytorch

|

PyTorch implementation of the Quasi-Recurrent Neural Network - up to 16 times faster than NVIDIA's cuDNN LSTM |

|

|

Dynamic-Evaluation.pytorch

|

Dynamic evaluation for PyTorch language models, now includes hyperparameter tuning |

|

|

Breaking the softmax Bottleneck.pytorch

|

Implementation of Breaking the Softmax Bottleneck: A High-Rank Language Model |

|

|

Review Networks.Torch

|

Review Network for Caption Generation |

|

|

Words or Characters gating.theano

|

Fine-grained Gating for Reading Comprehension |

|

|

FastText

|

Library for fast text representation and classification. |

|

|

Sent2Vec

|

General purpose unsupervised sentence representations |

|

|

NMT.PyTorch

|

PyTorch attentional NMT (with NLL, MRT, REINFORCE, and MIXER training objectives) |

|

|

Subword-NMT preprocessing

|

This repository contains preprocessing scripts to segment text into subword units. The primary purpose is to facilitate the reproduction of our experiments on Neural Machine Translation with subword units. |

|

|

BSO

|

Code for Sequence-to-Sequence Learning as Beam-Search Optimization (Wiseman and Rush, 2016). |

|

|

MIXER.torch

|

Mixed Incremental Cross-Entropy REINFORCE (MIXER), ICLR 2016 |

|

|

MUSE.pytorch

|

A library for Multilingual Unsupervised or Supervised word Embeddings |

|

|

Structured-Self-Attentive-Sentence-Embedding.pytorch

|

An open-source implementation of the paper "A Structured Self-Attentive Sentence Embedding", published by IBM and MILA. Also requires spaCy. |

|

|

nmt.pytorch

|

Neural Machine Translation Framework in PyTorch |

|

|

NeuralDialog-CVAE.pytorch

|

Knowledge-Guided CVAE for dialog generation |

|

|

bandit-nmt.pytorch

|

This is the code repo for our EMNLP 2017 paper "Reinforcement Learning for Bandit Neural Machine Translation with Simulated Human Feedback", which implements the A2C algorithm on top of a neural encoder-decoder model and benchmarks the combination under simulated noisy rewards. |

|

|

Diverse Beam Search.torch

|

This code implements Diverse Beam Search (DBS), a replacement for beam search that generates diverse sequences from sequence models like LSTMs. This repository lets you generate diverse image captions for models trained using the popular neuraltalk2 repository. A demo of our implementation on captioning is available at dbs.cloudcv.org |

|

|

SentEval.pytorch

|

SentEval is a library for evaluating the quality of sentence embeddings. We assess their generalization power by using them as features on a broad and diverse set of "transfer" tasks (more details here). Our goal is to ease the study and the development of general-purpose fixed-size sentence representations. |

|

|

SRU.pytorch

|

Training RNNs as Fast as CNNs (https://arxiv.org/abs/1709.02755) |

|

|

Listen-Attend-and-Spell-Pytorch

|

Listen, Attend and Spell (LAS) implemented in PyTorch |

|

|

rnn.wgan.Tensorflow

|

Code for training and evaluation of the model from "Language Generation with Recurrent Generative Adversarial Networks without Pre-training" (https://arxiv.org/abs/1706.01399) |

|

|

InferSent.pytorch

|

Sentence embeddings (InferSent) and training code for NLI. |

|

|

fairseq.Torch

|

Facebook AI Research Sequence-to-Sequence Toolkit |

|

|

wordemb.PyTorch

|

Load pretrained word embeddings (word2vec, GloVe format) into torch.FloatTensor for PyTorch |

|

|

seq2seq-attn.Torch

|

Sequence-to-sequence model with LSTM encoder/decoders and attention |

|

|

gated-attention-reader.PyTorch

|

TensorFlow/PyTorch implementation of the Gated Attention Reader |

|

|

glove.python

|

Toy Python implementation of GloVe (http://www-nlp.stanford.edu/projects/glove/) |

|

|

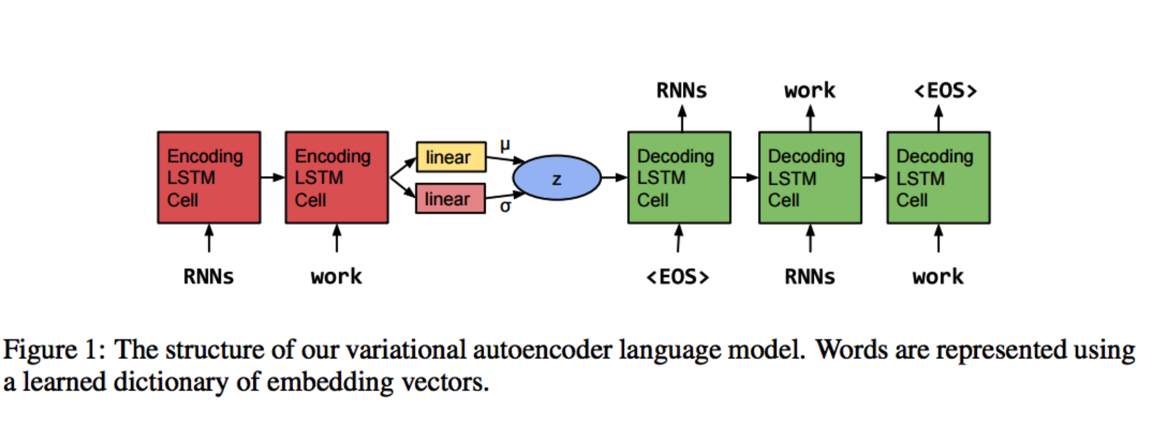

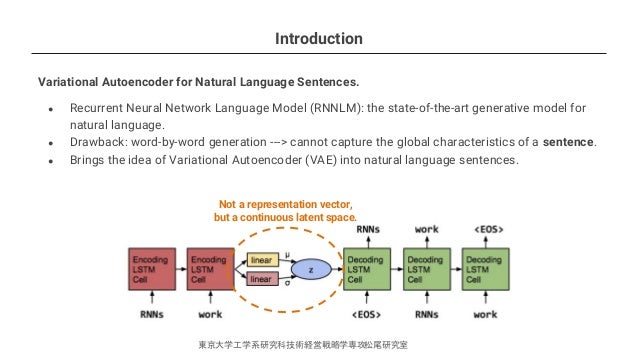

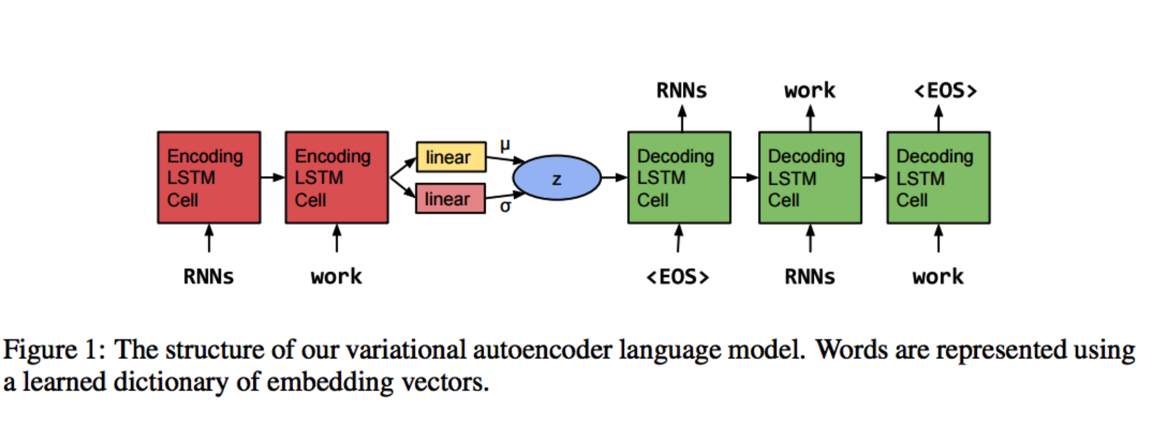

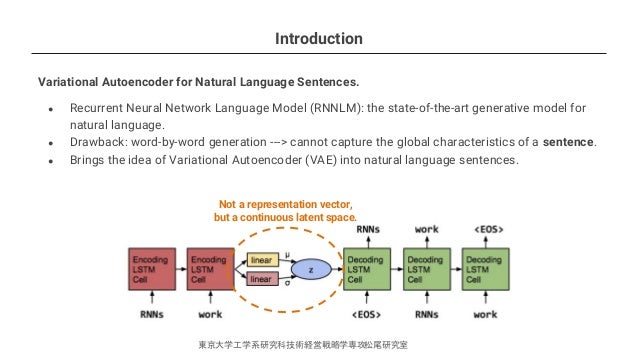

Variational-LSTM-Autoencoder.Torch

|

Variational Seq2Seq model |

|

|

Hard-Aware-Deeply-Cascaded-Embedding.c++

|

Source code for the paper "Hard-Aware Deeply Cascaded Embedding" |

|

|

seq2seq-intent-parsing.PyTorch

|

Intent parsing and slot filling in PyTorch with seq2seq + attention |

|

|

diverse-beam-search.Torch

|

|

|

|

sentiment-neuron.PyTorch

|

PyTorch version of generating-reviews-discovering-sentiment: https://github.com/openai/generating-reviews-discovering-sentiment |

|

|

actor-critic-public.theano

|

The source code for "An Actor Critic Algorithm for Structured Prediction" |

|

|

TorchGlove.PyTorch

|

PyTorch implementation of Global Vectors for Word Representation (GloVe). http://suriyadeepan.github.io/2016-06-28-easy-seq2seq/ |

|

|

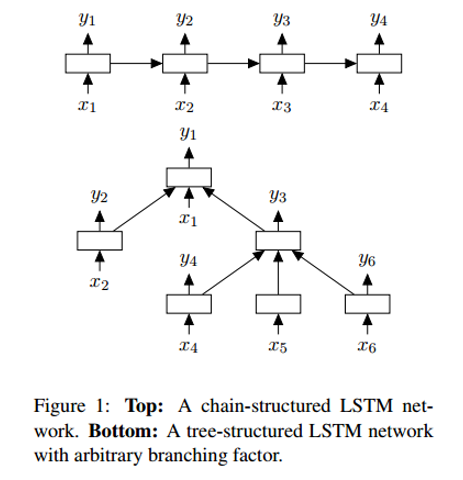

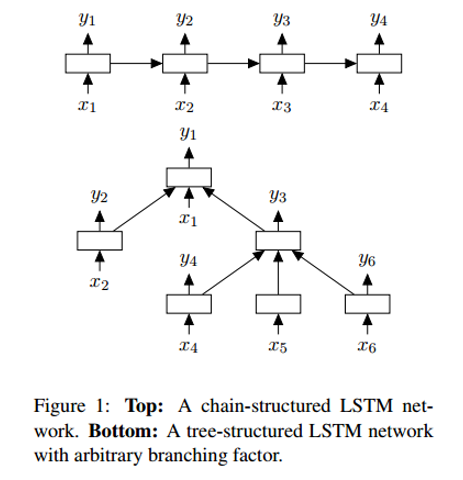

TreeLSTM.PyTorch

|

An attempt to implement the Constituency Tree-LSTM from "Improved Semantic Representations From Tree-Structured Long Short-Term Memory Networks" |

|

|

treelstm.pytorch

|

A PyTorch-based implementation of Tree-LSTM from Kai Sheng Tai's paper "Improved Semantic Representations From Tree-Structured Long Short-Term Memory Networks". |

|

|

OpenNMT.PyTorch

|

This is a PyTorch port of OpenNMT, an open-source (MIT) neural machine translation system. Full documentation is available here. |

|

|

seq2seq.pytorch

|

This is a complete suite for training sequence-to-sequence models in PyTorch. It consists of several models and code to both train and infer using them. |

|

|

attention-is-all-you-need.pytorch

|

This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, Illia Polosukhin, arXiv, 2017). |

|

|

nmt-seq2seq.PyTorch

|

Seq2seq model written in PyTorch |

|

|

seq2seq.Tensorflow

|

Attention-based sequence to sequence learning |

|

|

DenseContinuousSentances.Tensorflow

|

Working towards implementing "Generating Sentences from a Continuous Space", but with DenseNet |

|

|

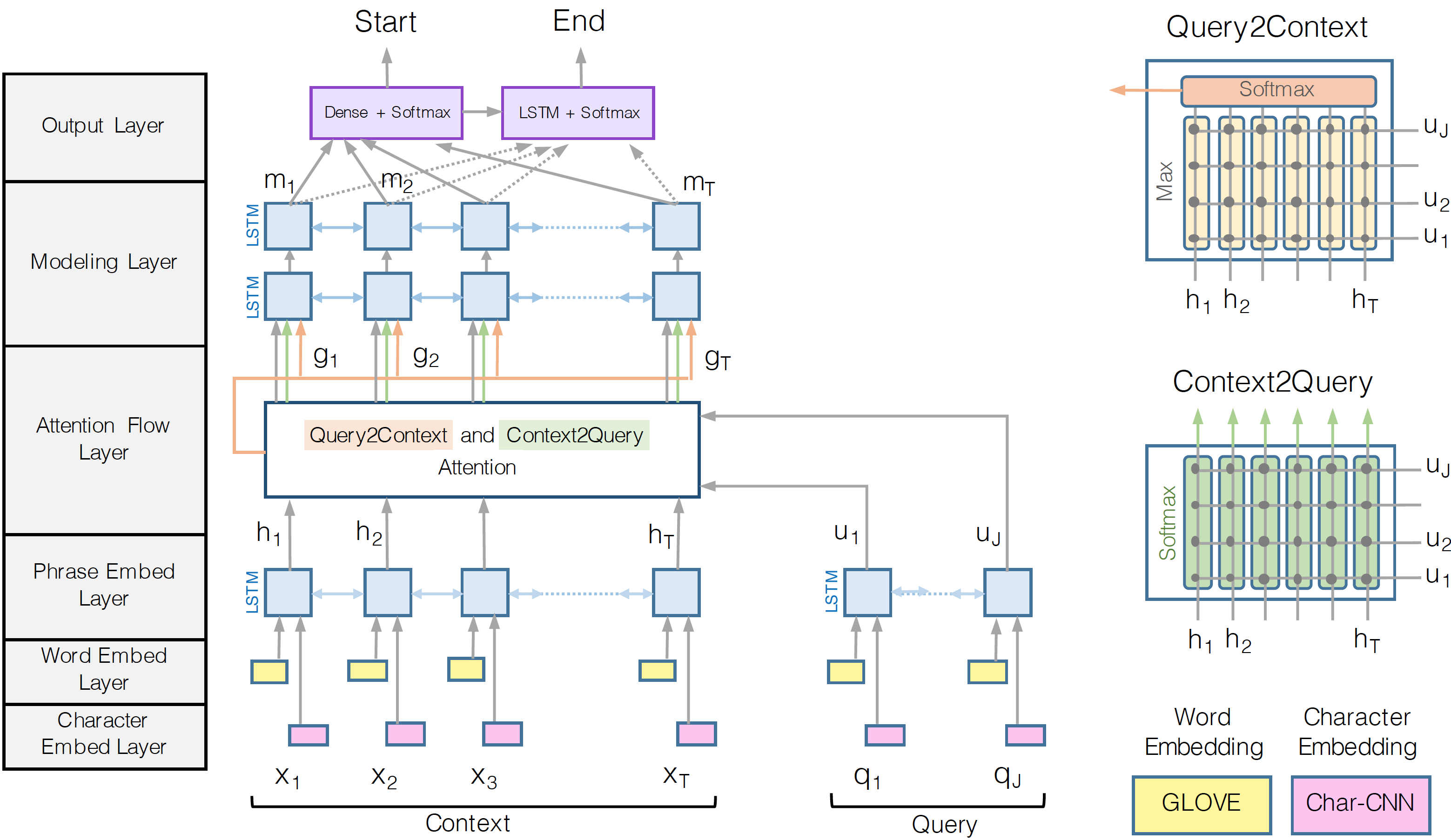

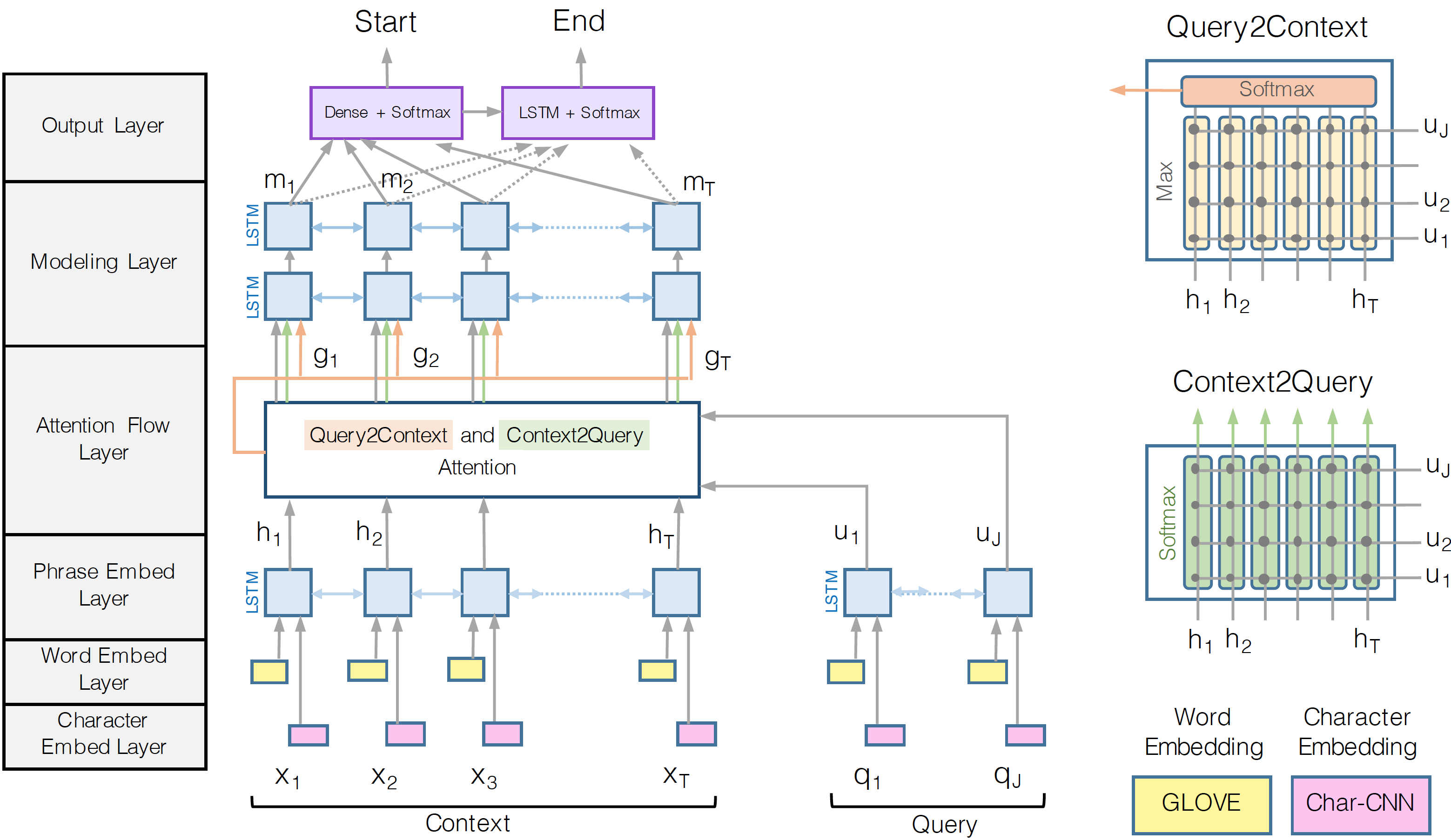

bi-att-flow.Tensorflow

|

Bidirectional Attention Flow |

|