---
title: "Quantitative Big Imaging"
author: "Statistics, Prediction, and Reproducibility"
date: 'ETHZ: 227-0966-00L'
output:
  slidy_presentation:
    footer: "Quantitative Big Imaging 2017"
---

Course Outline

- 23rd February - Introduction and Workflows

- 2nd March - Image Enhancement (A. Kaestner)

- 9th March - Tutorial on Python and Jupyter

- 16th March - Basic Segmentation, Discrete Binary Structures

- 23rd March - Advanced Segmentation

- 30th March - Analyzing Single Objects

- 6th April - Analyzing Complex Objects

- 13th April - Many Objects and Distributions

- 27th April - Statistics, Prediction, and Reproducibility

- 4th May - Dynamic Experiments

- 11th May - Scaling Up / Big Data

- 18th May - Guest Lecture - High Content Screening (M. Prummer) / Project Presentations

- 1st June - Guest Lecture - Big Aerial Images with Deep Learning and More Advanced Approaches (J. Montoya)

Literature / Useful References

Books

- Julien Claude, Morphometrics with R

  - Online through ETHZ

  - Chapter 3

  - Buy it

- John C. Russ, “The Image Processing Handbook” (Boca Raton: CRC Press)

  - Available online within the ethz.ch domain (or via proxy.ethz.ch / public VPN)

  - Hypothesis Testing Chapter

- The Grammar of Graphics: Leland Wilkinson - http://www.springer.com/gp/book/9780387245447

Videos / Podcasts

- Google/Stanford Statistics Intro

- https://www.youtube.com/watch?v=YFC2KUmEebc

- MCB 140 P-value lecture at UC Berkeley (Audio)

- https://itunes.apple.com/us/itunes-u/mcb-140-fall-2007-general/id461120088?mt=10

- Correlation and Causation (Video)

- https://www.youtube.com/watch?v=YFC2KUmEebc

- Last Week Tonight: Scientific Studies (https://www.youtube.com/watch?v=0Rnq1NpHdmw)

Papers / Sites

- Matlab Unit Testing Documentation

- Databases Introduction

- Visualizing Genomic Data (General Visualization Techniques)

- M.E. Wolak, D.J. Fairbairn, Y.R. Paulsen (2012) Guidelines for Estimating Repeatability. Methods in Ecology and Evolution 3(1):129-137.

- David J.C. MacKay, Bayesian Interpolation (1991) [http://citeseer.ist.psu.edu/viewdoc/summary?doi=10.1.1.27.9072]

Model Evaluation

Iris Dataset

- The Iris dataset was used in Fisher’s classic 1936 paper, The Use of Multiple Measurements in Taxonomic Problems: http://rcs.chemometrics.ru/Tutorials/classification/Fisher.pdf

Previously on QBI …

- Image Enhancement

- Highlighting the contrast of interest in images

- Minimizing Noise

- Understanding image histograms

- Automatic Methods

- Component Labeling

- Single Shape Analysis

- Complicated Shapes

- Distribution Analysis

Quantitative “Big” Imaging

So far the course has covered plenty of imaging and a few quantitative metrics, but “big” has not really come into play yet.

What does big mean?

- Not just (or even necessarily) large

- It means being ready for big data

  - volume, velocity, variety (the 3 V’s)

  - scalable, fast, easy to customize

So what is “big” imaging?

- doing analyses in a disciplined manner

  - fixed steps

  - easy to regenerate results

  - no magic

- having everything automated

  - 100 samples is as easy as 1 sample

- being able to adapt and reuse analyses

  - one script that works really well, rerun with modified parameters

  - different types of cells

  - different regions

Objectives

- Scientific studies all try to arrive at a single number

  - Make sure this number describes the structure well (what we have covered before)

  - Make sure the number is meaningful (today!)

- How do we compare the number from different samples and groups?

  - Within a sample or the same type of samples

  - Between samples

- How do we compare different processing steps like filter choice, minimum volume, resolution, etc.?

- How do we evaluate our parameter selection?

- How can we ensure our techniques do what they are supposed to do?

- How can we visualize so much data? Are there rules?

Outline

- Motivation (Why and How?)

- Scientific Goals

- Reproducibility

- Predicting and Validating

- Statistical metrics and results

- Parameterization

- Parameter sweep

- Sensitivity analysis

- Unit Testing

- Visualization

What do we start with?

Going back to our original cell image

- We have been able to get rid of the noise in the image and find all the cells (lectures 2-4)

- We have analyzed the shape of the cells using the shape tensor (lecture 5)

- We even separated cells joined together using Watershed (lecture 6)

- We have created even more metrics characterizing the distribution (lecture 7)

We have at least a few samples (or different regions), a large number of metrics, and an almost equally large number of parameters to tune.

How do we do something meaningful with it?

Correlation and Causation

One of the most repeated criticisms of scientific work is that correlation and causation are confused.

- Correlation

  - means a statistical relationship

  - very easy to show (single calculation)

- Causation

  - implies there is a mechanism between A and B

  - very difficult to show (impossible to prove)

Controlled and Observational

There are two broad classes of data and scientific studies.

Observational

- Exploring large datasets looking for trends

- Population is random

- Not always hypothesis driven

- Rarely leads to causation

We examined 100 people and the ones with blue eyes were on average 10cm taller

In 100 cake samples, we found a 0.9 correlation between cooking time and bubble size

Controlled

- Most scientific studies fall into this category

- Specifics of the groups are controlled

- Can lead to causation

We examined 50 mice with gene XYZ off and 50 with gene XYZ on, and the foot size increased by 10%

We increased the temperature and the number of pores in the metal increased by 10%

Simple Model: Magic / Weighted Coin


Since most experiments in science are specific, noisy, and often very complicated, they are not usually good teaching examples, so we start with a simpler model.

- Magic / Biased Coin

  - You buy a magic coin at a shop

  - How many times do you need to flip it to prove it is not fair?

  - If I flip it 10 times and another person flips it 10 times, is that the same as 20 flips?

  - If I flip it 10 times and then multiply the results by 10, is that the same as 100 flips?

  - If I buy 10 coins and want to know which ones are fair, what do I do?

Simple Model: Magic / Weighted Coin

- Each coin represents a stochastic variable $\mathcal{X}$ and each flip represents an observation $\mathcal{X}_i$.

- The act of performing a coin flip $\mathcal{F}$ is an observation $\mathcal{X}_i = \mathcal{F}(\mathcal{X})$

We normally assume

- A fair coin has an expected value of $E(\mathcal{X})=0.5$ → 50% heads, 50% tails

- An unbiased flip(per) means

  - each flip is independent of the others

$$ P(\mathcal{F}_1(\mathcal{X}), \mathcal{F}_2(\mathcal{X})) = P(\mathcal{F}_1(\mathcal{X})) \cdot P(\mathcal{F}_2(\mathcal{X})) $$

  - the expected value of each flip is the same as that of the coin

$$ E(\mathcal{F}_i(\mathcal{X})) = E(\mathcal{X}) \quad \text{for every flip } i $$

Simple Model to Reality

Coin Flip

- Each flip gives us a small piece of information about the flipper and the coin

- More flips provide more information

- Random / Stochastic variations in coin and flipper cancel out

- Systematic variations accumulate

Real Experiment

- Each measurement tells us about our sample, our instrument, and our analysis

- More measurements provide more information

- Random / Stochastic variations in sample, instrument, and analysis cancel out

- Normally the analysis has very little to no stochastic variation

- Systematic variations accumulate
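The claim that random variations cancel out while systematic ones accumulate can be checked with a short simulation. The sketch below is written in Python (the language of the course's Jupyter tutorial) using only the standard library; `mean_of_flips` and its `flipper_bias` argument are hypothetical names, with `flipper_bias` standing in for a systematic error of the flipper or instrument.

```python
import random


def mean_of_flips(n_flips, p_heads=0.5, flipper_bias=0.0, seed=0):
    """Fraction of heads in n_flips simulated flips.

    p_heads      -- the coin's true expected value E(X)
    flipper_bias -- hypothetical systematic error of the flipper / instrument
    """
    rng = random.Random(seed)  # fixed seed: the simulation itself is reproducible
    p_observed = p_heads + flipper_bias
    heads = sum(rng.random() < p_observed for _ in range(n_flips))
    return heads / n_flips


for n in (10, 100, 10_000):
    print(f"{n:>6} flips: unbiased flipper {mean_of_flips(n):.3f}, "
          f"biased flipper {mean_of_flips(n, flipper_bias=0.1):.3f}")
```

As the number of flips grows, the unbiased estimate settles near 0.5 (the random scatter shrinks roughly as one over the square root of the number of flips), while the biased one settles near 0.6: more flips do not remove a systematic error, they only make it look more certain.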

Iris: A more complicated model

Coin flips are very simple and can be difficult to map onto a real experiment. A very popular dataset for going beyond coin flips is the Iris dataset, which contains several measurements (sepal and petal length and width) of 150 iris plants together with their species.

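library(plyr)     # ldply, llply, ddply (load before dplyr to avoid masking problems)
library(dplyr)    # %>%, mutate, select, sample_n
library(reshape2) # melt
library(ggplot2)
library(knitr)    # kable

iris %>% sample_n(5) %>% kable

| | Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species |
|---|---|---|---|---|---|
| 126 | 7.2 | 3.2 | 6.0 | 1.8 | virginica |
| 13 | 4.8 | 3.0 | 1.4 | 0.1 | setosa |
| 75 | 6.4 | 2.9 | 4.3 | 1.3 | versicolor |
| 68 | 5.8 | 2.7 | 4.1 | 1.0 | versicolor |
| 39 | 4.4 | 3.0 | 1.3 | 0.2 | setosa |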

iris %>%

mutate(plant.id=1:nrow(iris)) %>%

melt(id.vars=c("Species","plant.id"))->flat_iris

flat_iris %>%

merge(flat_iris,by=c("Species","plant.id")) %>%

ggplot(aes(value.x,value.y,color=Species)) +

geom_jitter()+

facet_grid(variable.x~variable.y,scales="free")+

theme_bw(10)

Reproducibility

Reproducibility is a very broad topic with plenty of sub-areas and deeper meanings. Here we mean two things by it:

Analysis

The process of going from images to numbers is documented clearly enough that anyone, anywhere, could follow it and get the same numbers (within some tolerance) from your samples

- No platform dependence

- No proprietary or “in house” algorithms

- No manual clicking, tweaking, or copying

- One script to go from image to result

Measurement

Everything required for reproducible analysis, plus: taking a measurement several times (the noise and exact alignment vary each time) does not change the statistics significantly

- No sensitivity to mounting or rotation

- No sensitivity to noise

- No dependence on exact illumination

Reproducible Analysis

The basis for reproducible analysis is scripts and macros, since we will need to perform the same analysis many times to understand how reproducible it is.

IMAGEFILE=$1

THRESHOLD=130

matlab -r "inImage=imread('$IMAGEFILE'); threshImage=inImage>$THRESHOLD; analysisScript;"

or

java -jar ij.jar -macro TestMacro.ijm blobs.tif

or

Rscript -e "library(plyr);..."
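The same idea as a minimal Python sketch: the threshold is a named parameter with a default instead of a value typed into a GUI, and one function goes from image to number. The plain-text image format read by `load_image` is an assumption made to keep the example dependency-free; a real pipeline would read images with an imaging library.

```python
import argparse

DEFAULT_THRESHOLD = 130  # mirrors THRESHOLD=130 in the shell example


def load_image(path):
    """Hypothetical loader: one image row per line, whitespace-separated gray values."""
    with open(path) as f:
        return [[int(v) for v in line.split()] for line in f if line.strip()]


def analyze(image, threshold=DEFAULT_THRESHOLD):
    """Threshold the image and return the number of foreground pixels."""
    return sum(pixel > threshold for row in image for pixel in row)


def main(argv=None):
    """Command-line entry point: image file in, one number out, no manual steps."""
    parser = argparse.ArgumentParser(description="image -> result in one script")
    parser.add_argument("image_file")
    parser.add_argument("--threshold", type=int, default=DEFAULT_THRESHOLD)
    args = parser.parse_args(argv)
    result = analyze(load_image(args.image_file), args.threshold)
    print(result)
    return result
```

Called as `main(["image.txt", "--threshold", "150"])`, or from the shell once `main()` is placed under an `if __name__ == "__main__":` guard (`image.txt` is a hypothetical file), running it over 100 samples is just a loop over file names.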

Comparing Groups: Intraclass Correlation Coefficient

The intraclass correlation coefficient (ICC) measures how similar objects within a group are compared to objects in different groups.

ggplot(iris,aes(x=Species,y=Sepal.Width))+

geom_boxplot()+

geom_jitter()+

labs(x="Species",y="Sepal Width",title="Low Group Similarity")+

theme_bw(20)

ggplot(iris,aes(x=Species,y=Petal.Length))+

geom_boxplot()+

geom_jitter()+

labs(x="Species",y="Petal Length",title="High Group Similarity")+

theme_bw(20)

Intraclass Correlation Coefficient Definition

$$ ICC = \frac{S_A^2}{S_A^2+S_W^2} $$

where

- $S_A^2$ is the variance among groups or classes

  - Estimate it from the spread of the group means (the squared standard deviation of the mean values of the groups)

- $S_W^2$ is the variance within groups or classes

  - Estimate it by averaging the squared standard deviations of the individual groups

- An ICC of 1 means 100% of the variance is between classes

- An ICC of 0 means 0% of the variance is between classes
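A minimal Python sketch of the plug-in estimate described above, using only the standard library. It uses population variances of the group means and within the groups; this choice is an assumption for illustration, and the ANOVA-based estimator behind `ICCbare` in the R ICC package differs in detail, so the two will not give identical numbers.

```python
from statistics import mean, pvariance


def simple_icc(groups):
    """Plug-in ICC = S_A^2 / (S_A^2 + S_W^2).

    groups -- list of lists of measurements, one inner list per class
    S_A^2  -- variance of the group means (between-group variance)
    S_W^2  -- average of the within-group variances
    """
    s_a2 = pvariance([mean(g) for g in groups])
    s_w2 = mean(pvariance(g) for g in groups)
    if s_a2 + s_w2 == 0:
        raise ValueError("all values identical: ICC undefined")
    return s_a2 / (s_a2 + s_w2)


print(simple_icc([[1, 3], [5, 7]]))  # well separated groups -> 0.8
```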

Intraclass Correlation Coefficient: Values

library("ICC")

icVal<-ICCbare(Species,Sepal.Width,data=iris)

ggplot(iris,aes(x=Species,y=Sepal.Width))+

geom_boxplot()+

geom_jitter()+

labs(x="Species",y="Sepal Width",title=sprintf("Low Group Similarity\n ICC:%2.2f",icVal))+

theme_bw(20)

icVal<-ICCbare(Species,Petal.Length,data=iris)

ggplot(iris,aes(x=Species,y=Petal.Length))+

geom_boxplot()+

geom_jitter()+

labs(x="Species",y="Petal Length",title=sprintf("High Group Similarity\n ICC:%2.2f",icVal))+

theme_bw(20)

Intraclass Correlation Coefficient: Values for Coin-Flips

We have one biased coin and try to figure out how many flips we need before the ICC can tell it apart from a normal coin

name.list<-c("Coin A","Coin B")

test.data<-plyr::ldply(c(1:length(name.list)),function(class.id) {

data.frame(name=name.list[class.id],values=runif(100,max=1+2*(class.id-1))>0.5)

})

icVal<-ICCbare(name,values,data=test.data)

ggplot(test.data,aes(x=name,y=values))+

geom_jitter()+

labs(x="Groups",y="Value",title=sprintf("100 flips\n ICC:%2.2f",icVal))+

theme_bw(20)

With many thousands of flips we eventually see a very strong difference, but unless the coin is very strongly biased, the ICC is a poor indicator of the difference.

name.list<-c("Coin A","Coin B")

test.data<-plyr::ldply(c(1:length(name.list)),function(class.id) {

data.frame(name=name.list[class.id],values=runif(20000,max=1+2*(class.id-1))>0.5)

})

icVal<-ICCbare(name,values,data=test.data)

ggplot(test.data,aes(x=name,y=values))+

geom_jitter()+

labs(x="Groups",y="Value",title=sprintf("20,000 flips\n ICC:%2.2f",icVal))+

theme_bw(20)

Comparing Groups: Tests

Once the reproducibility has been measured, it is possible to compare groups. The idea is to construct a test that assesses how likely the observed data would be if the two groups were the same:

- List assumptions

- Establish a null hypothesis

- Usually both groups are the same

- Calculate the probability of the observations given the truth of the null hypothesis

- Requires knowledge of probability distribution of the data

- Modeling can be exceptionally complicated

Loaded Coin

We have 1 coin from a magic shop

- our assumptions are

- we flip and observe flips of coins accurately and independently

- the coin is invariant and always has the same expected value

- our null hypothesis is that the coin is unbiased, $E(\mathcal{X})=0.5$

- we can calculate the probability of a given observation for a given number of flips (p-value)

n.flips<-c(1,5,10)

cf.table<-data.frame(No.Flips=n.flips,PAH=paste(round(1000*0.5^n.flips)/10,"%"))

names(cf.table)<-c("Number of Flips","Probability of All Heads Given Null Hypothesis (p-value)")

kable(cf.table)

| Number of Flips | Probability of All Heads Given Null Hypothesis (p-value) |
|---|---|
| 1 | 50 % |
| 5 | 3.1 % |
| 10 | 0.1 % |
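The table only covers the all-heads case. Under the same null hypothesis, the p-value for any outcome is the probability of seeing at least that many heads, which is a one-sided binomial test. A minimal Python sketch (the function name is ours):

```python
from math import comb


def p_at_least_k_heads(k, n, p=0.5):
    """One-sided p-value: probability of >= k heads in n flips if E(X) = p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


for n in (1, 5, 10):
    print(n, f"{100 * p_at_least_k_heads(n, n):.1f} %")  # reproduces the table
```

For example, 8 heads out of 10 flips gives 56/1024, about 5.5%, which would not pass a 5% threshold even though it looks suspicious.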

How good is good enough?

Comparing Groups: Student’s T Distribution

We usually do not know our distribution very well, nor do we have enough samples to create a sufficient probability model.

Student T Distribution

We assume the distribution of our stochastic variable is normal (Gaussian); the t-distribution then describes how the mean estimated from a few observations scatters around the mean of the underlying distribution (after scaling by the estimated standard error).

- We estimate the likelihood of our observed values assuming they are coming from random observations of a normal process

Student T-Test

Incorporates this distribution and provides an easy method for assessing the likelihood that the two given sets of observations come from the same underlying process (null hypothesis)

- Assume unbiased observations

- Assume normal distribution
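The t statistic itself is simple arithmetic. The Python sketch below computes Welch's version (unequal variances), which is what R's `t.test` uses by default; turning t into a p-value needs the CDF of the t distribution, which the Python standard library does not provide (for example `scipy.stats.t` does), so that step is left out.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom.

    Uses sample variances (n - 1 denominator) and does not assume the two
    groups share a variance.
    """
    va, vb = variance(a) / len(a), variance(b) / len(b)  # squared standard errors
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va**2 / (len(a) - 1) + vb**2 / (len(b) - 1))
    return t, df


t, df = welch_t([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
print(f"t = {t:.3f}, df = {df:.1f}")
```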

Multiple Testing Bias

Back to the magic coin. Let’s assume we are trying to publish a paper and have heard that a p-value of < 0.05 (5%) is good enough. That means if we get 5 heads in a row, we are good!

n.flips<-c(1,4,5)

cf.table<-data.frame(No.Flips=n.flips,PAH=paste(round(1000*0.5^n.flips)/10,"%"))

names(cf.table)<-c("Number of Flips","Probability of All Heads Given Null Hypothesis (p-value)")

kable(cf.table)

| Number of Flips | Probability of All Heads Given Null Hypothesis (p-value) |
|---|---|
| 1 | 50 % |
| 4 | 6.2 % |
| 5 | 3.1 % |

n.friends<-c(1,10,20,40,80)

cfr.table<-data.frame(No.Friends=n.friends,PAH=paste(round((1000*(1-(1-0.5^5)^n.friends)))/10,"%"))

names(cfr.table)<-c("Number of Friends Flipping","Probability Someone Flips 5 heads")

kable(cfr.table)

| Number of Friends Flipping | Probability Someone Flips 5 heads |
|---|---|
| 1 | 3.1 % |
| 10 | 27.2 % |
| 20 | 47 % |
| 40 | 71.9 % |
| 80 | 92.1 % |

Clearly this is not the case; otherwise we could keep flipping coins, or ask all of our friends to flip until someone got 5 heads, and publish.

The p-value is only meaningful when the experiment matches what we did.

- We didn’t say the chance of ever getting 5 heads was < 5%

- We said that if we have exactly 5 observations and all of them are heads, the likelihood that a fair coin produced that result is < 5%

There are many methods to correct for this, and most of them just scale p (or, equivalently, the significance threshold). For example, the probability of a sequence of 5 heads in a row somewhere in 10 flips is about 10.9%, roughly 3.5x higher than for exactly 5 flips.
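The exact probability of a run of 5 heads somewhere in 10 flips can be counted with a short recurrence over the sequences that do not contain such a run. A minimal Python sketch (the function name is ours):

```python
def p_run_of_heads(run, n_flips):
    """Probability that n_flips fair flips contain at least `run` heads in a row.

    no_run[n] counts sequences of length n without such a run. For n >= run,
    each of them ends in a tail followed by j heads (0 <= j < run), preceded
    by any valid sequence of length n - j - 1, which gives the sum below.
    """
    no_run = [2**n for n in range(run)]  # shorter sequences can never contain the run
    for n in range(run, n_flips + 1):
        no_run.append(sum(no_run[n - k] for k in range(1, run + 1)))
    return 1 - no_run[n_flips] / 2**n_flips


print(p_run_of_heads(5, 5))   # 0.03125
print(p_run_of_heads(5, 10))  # 0.109375
```

The ratio is exactly 3.5. Simply multiplying 3.1% by the 6 places where the run could start (about 19%) overestimates it, because overlapping windows are not independent; this is one reason the simple scaling corrections are conservative.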

Multiple Testing Bias: Experiments

This is very bad news for us. We have the ability to quantify all sorts of interesting metrics:

- cell distance to other cells

- cell oblateness

- cell distribution oblateness

So let’s throw them all into a magical statistics algorithm and push the publish button.

With our p-value threshold of 0.05 and a study with 10 samples in each group, how does increasing the number of variables affect our result?
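The effect of adding variables can also be sketched without generating any measurements: if there is no real effect and the test's assumptions hold, each p-value is uniformly distributed between 0 and 1. The Python sketch below relies on that assumption and counts how many of `n_vars` independent tests fall below 0.05, with and without the Bonferroni correction (dividing the threshold by the number of tests).

```python
import random


def expected_false_hits(n_vars, n_studies=5_000, alpha=0.05, correct=False, seed=0):
    """Average number of 'significant' variables per study when no effect exists.

    Assumes every p-value is uniform on [0, 1] under the null hypothesis, so
    no data or t-tests need to be simulated. correct=True uses the Bonferroni
    threshold alpha / n_vars.
    """
    rng = random.Random(seed)
    threshold = alpha / n_vars if correct else alpha
    hits = 0
    for _ in range(n_studies):
        hits += sum(rng.random() <= threshold for _ in range(n_vars))
    return hits / n_studies


for n_vars in (1, 10, 60):
    print(n_vars, expected_false_hits(n_vars), expected_false_hits(n_vars, correct=True))
```

Without correction the count grows like 0.05 times the number of variables (about 3 false findings for 60 variables); with the correction it stays near 0.05 per study.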

make.random.data<-function(n.groups=2,n.samples=10,n.vars=1,rand.fun=runif,group.off.fun=function(grp.id) 0) {

ldply(1:n.groups,function(c.group) {

data.frame(group=c.group,

do.call(cbind,llply(1:n.vars,function(c.var) group.off.fun(c.group)+rand.fun(n.samples)))

)

})

}

# only works for two groups

all.t.test<-function(in.data) {

group1<-subset(in.data,group==1)[,-1,drop=F]

group2<-subset(in.data,group==2)[,-1,drop=F]

ldply(1:ncol(group1),function(var.id) {

tres<-t.test(group1[,var.id],group2[,var.id])

data.frame(var.col=var.id,

p.val=tres$p.value,

method=tres$method,

var.count=ncol(group1),

sample.count=nrow(in.data))

}

)

}

# run the entire analysis several times to get an average

test.random.data<-function(n.test=10,...) {

ldply(1:n.test,function(c.test) cbind(test.num=c.test,all.t.test(make.random.data(...))))

}

var.range<-round(seq(1,60,length.out=15))

test.cnt<-80

sim.data<-ldply(var.range,function(n.vars) test.random.data(test.cnt,n.vars=n.vars))

sig.likelihood<-ddply(sim.data,.(var.count),function(c.tests) {

data.frame(sig.vars=nrow(subset(c.tests,p.val<=0.05))/length(unique(c.tests$test.num)))

})

ggplot(sig.likelihood,

aes(x=var.count,y=sig.vars))+

geom_point()+geom_line()+

labs(x="Number of Variables in Study",y="Number of Significant \n (P<0.05) Findings")+

theme_bw(20)

Multiple Testing Bias: Correction

Using the simple correction factor (the number of tests performed, i.e. the Bonferroni correction), we can make the number of significant findings constant again.

sig.likelihood.corr<-ddply(sim.data,.(var.count),function(c.tests) {

data.frame(sig.vars=nrow(subset(c.tests,p.val<=0.05/var.count))/length(unique(c.tests$test.num)))

})

ggplot(sig.likelihood.corr,

aes(x=var.count,y=sig.vars))+

geom_point()+geom_line(aes(color="Corrected"))+

geom_point(data=sig.likelihood)+

geom_line(data=sig.likelihood,aes(color="Non-Corrected"))+

geom_hline(yintercept=0.05,color="green",alpha=0.4,size=2)+

scale_y_sqrt()+

labs(x="Number of Variables in Study",y="Number of Significant \n (P<0.05) Findings")+

theme_bw(20)

So no harm done: we just add this correction factor, right? Well, what if we have exactly one variable with a shift of 1.0 standard deviations between the groups?

var.range<-round(seq(10,60,length.out=10))

test.cnt<-100

one.diff.sample<-function(grp.id) ifelse(grp.id==2,.10,0)

sim.data.diff<-ldply(var.range,function(n.samples)

test.random.data(test.cnt,n.samples=n.samples,

rand.fun=function(n.cnt) rnorm(n.cnt,mean=1,sd=0.1),

group.off.fun=one.diff.sample))

ggplot(sim.data.diff,aes(x=sample.count,y=p.val))+

geom_point()+

geom_smooth(aes(color=" 1 Variable"))+

geom_hline(yintercept=0.05,color="green",alpha=0.4,size=2)+

labs(x="Number of Samples in Study",y="P-Value for a 10% Difference")+

theme_bw(20)

Multiple Testing Bias: Sample Size

var.range<-c(1,5,10,20,100) # variable count

sim.data.psig<-ldply(var.range,function(c.vcnt) {

cbind(var.count=c.vcnt,ddply(sim.data.diff,.(sample.count),function(c.sample)

data.frame(prob.sig=nrow(subset(c.sample,p.val<=0.05/c.vcnt))/nrow(c.sample))

))

})

ggplot(sim.data.psig,aes(x=sample.count,y=100*prob.sig))+

geom_line(aes(color=as.factor(var.count)),size=2)+

ylim(0,100)+

labs(x="Number of Samples in Study",y="Probability of Finding\n Significant Variable (%)",color="Variables")+

theme_bw(20)

Predicting and Validating

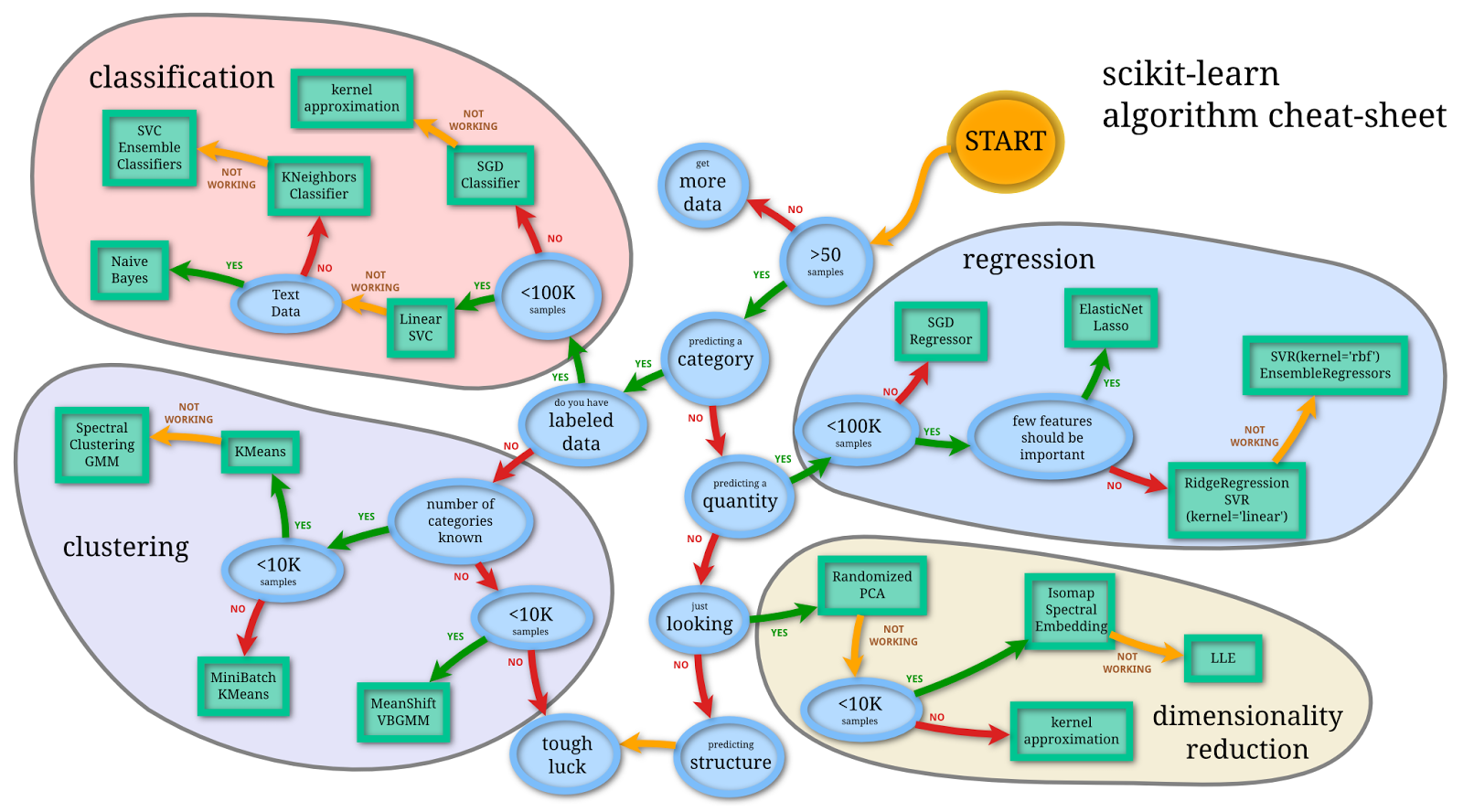

- Borrowed from http://peekaboo-vision.blogspot.ch/2013/01/machine-learning-cheat-sheet-for-scikit.html

Main Categories

- Classification

- Regression

- Clustering

- Dimensionality Reduction

Overview

Basically all of these are ultimately functions which map inputs to outputs.

The input could be

- an image

- a point

- a feature vector

- or a multidimensional tensor

The output is

- a value (regression)

- a classification (classification)

- a group (clustering)

- a vector / matrix / tensor with fewer degrees of freedom and/or less noise than the original data (dimensionality reduction)

Overfitting

The most serious problem with machine learning and such approaches is overfitting your model to your data. Particularly as models get increasingly complex (random forest, neural networks, deep learning, …), it becomes more and more difficult to apply common sense or even understand exactly what a model is doing and why a given answer is produced.

magic_classifier = {}

# training

magic_classifier['Dog'] = 'Animal'

magic_classifier['Bob'] = 'Person'

magic_classifier['Fish'] = 'Animal'

Now use this classifier; on the training data it works really well:

magic_classifier['Dog'] == 'Animal' # true, 1/1 so far!

magic_classifier['Bob'] == 'Person' # true, 2/2 still perfect!

magic_classifier['Fish'] == 'Animal' # true, 3/3, wow!

On new data it doesn’t work at all; it doesn’t even execute.

magic_classifier['Octopus'] == 'Animal' # KeyError?! but it was working so well

magic_classifier['Dan'] == 'Person' # KeyError?!

The example above appeared to be a perfect classifier for mapping names to animals or people, but it just memorized the inputs and reproduced them at the output, so it didn’t actually learn anything; it just copied.

Validation

Validation is relevant for each of these categories, but it is applied in a slightly different way depending on the category. The idea is to divide the dataset into groups called training and validation, or ideally training, validation, and testing. The analysis is then

- developed on training

- iteratively validated on validation

- ultimately tested on testing
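A minimal Python sketch of such a split (`split_dataset` is a hypothetical helper). Shuffling once and cutting the list gives exactly the requested sizes (up to rounding) and guarantees that the three sets do not overlap; the fixed `seed` is an assumption added so that the split itself can be regenerated.

```python
import random


def split_dataset(items, fractions=(0.6, 0.3, 0.1), seed=42):
    """Randomly split items into training, validation, and testing lists.

    fractions -- share of the data for (training, validation, testing)
    seed      -- fixed so that the same split can be regenerated
    """
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(fractions[0] * len(shuffled))
    n_valid = round(fractions[1] * len(shuffled))
    return (shuffled[:n_train],                    # develop the analysis here
            shuffled[n_train:n_train + n_valid],   # validate iteratively here
            shuffled[n_train + n_valid:])          # test only once, at the end


train, valid, test = split_dataset(range(150))  # e.g. indices of 150 samples
print(len(train), len(valid), len(test))  # 90 45 15
```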

Concrete Example: Classifying Flowers

Here we return to the iris data set and try to automatically classify flowers

iris %>% sample_n(5) %>% kable(digits=2)

| | Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species |
|---|---|---|---|---|---|
| 11 | 5.4 | 3.7 | 1.5 | 0.2 | setosa |
| 105 | 6.5 | 3.0 | 5.8 | 2.2 | virginica |
| 10 | 4.9 | 3.1 | 1.5 | 0.1 | setosa |
| 101 | 6.3 | 3.3 | 6.0 | 2.5 | virginica |
| 72 | 6.1 | 2.8 | 4.0 | 1.3 | versicolor |

Dividing the data

We first decide on a split, in this case 60%, 30%, 10% for training, validation, and testing and randomly divide up the data.

div.iris<-iris %>%

mutate(

# generate a random number uniformly between 0 and 1

rand_value = runif(nrow(iris)),

# divide the data based on how high this number is into different groups

data_div = ifelse(rand_value<0.6,"Training",

ifelse(rand_value<0.9,"Validation",

"Testing")

)

) %>% select(-rand_value) # we don't need this anymore

div.iris %>% sample_n(4) %>% kable(digits=2)

| | Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species | data_div |
|---|---|---|---|---|---|---|
| 6 | 5.4 | 3.9 | 1.7 | 0.4 | setosa | Training |
| 86 | 6.0 | 3.4 | 4.5 | 1.6 | versicolor | Validation |
| 122 | 5.6 | 2.8 | 4.9 | 2.0 | virginica | Training |
| 142 | 6.9 | 3.1 | 5.1 | 2.3 | virginica | Training |

ggplot(div.iris,aes(Sepal.Length,Sepal.Width))+

geom_point(aes(shape=data_div,color=Species),size=2)+

labs(shape="Type")+

facet_grid(~data_div)+

coord_equal()+

theme_bw(10)

Using a simple decision tree

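Making a decision tree can be done by providing the output (class) as a function of the input, in this case just a combination of Sepal.Length and Sepal.Width (Species~Sepal.Length+Sepal.Width). From this formula, rpart builds the tree by repeatedly splitting the data on one input at a time.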

library(rpart)

library(rpart.plot)

training.data <- div.iris %>% subset(data_div == "Training")

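dec.tree<-rpart(Species~Sepal.Length+Sepal.Width,data=training.data)

A tree can be visualized graphically as a trunk (the topmost node) dividing progressively into smaller subnodes

or as a list of rules to apply (the printout below was produced by a tree fit to all 150 plants; fit to the training subset only, n will be smaller and the splits may differ)

print(dec.tree)

## n= 150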

##

## node), split, n, loss, yval, (yprob)

## * denotes terminal node

##

## 1) root 150 100 setosa (0.33333333 0.33333333 0.33333333)

## 2) Sepal.Length< 5.45 52 7 setosa (0.86538462 0.11538462 0.01923077)

## 4) Sepal.Width>=2.8 45 1 setosa (0.97777778 0.02222222 0.00000000) *

## 5) Sepal.Width< 2.8 7 2 versicolor (0.14285714 0.71428571 0.14285714) *

## 3) Sepal.Length>=5.45 98 49 virginica (0.05102041 0.44897959 0.50000000)

## 6) Sepal.Length< 6.15 43 15 versicolor (0.11627907 0.65116279 0.23255814)

## 12) Sepal.Width>=3.1 7 2 setosa (0.71428571 0.28571429 0.00000000) *

## 13) Sepal.Width< 3.1 36 10 versicolor (0.00000000 0.72222222 0.27777778) *

## 7) Sepal.Length>=6.15 55 16 virginica (0.00000000 0.29090909 0.70909091) *
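Each line of the printout above is one split on one variable. A single such split (a decision stump) can be sketched in Python: try every midpoint between neighboring values and keep the threshold that gives the purest children, measured by Gini impurity, which is rpart's default criterion for classification. The function names and the feature values in the example are made up for illustration, not taken from the iris data.

```python
def gini(labels):
    """Gini impurity: 1 - sum over classes of (class proportion)^2."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(xs, labels):
    """Best single threshold on one feature, i.e. one node of a decision tree.

    Tries the midpoints between consecutive distinct values and returns
    (threshold, weighted Gini impurity of the two child nodes). If no split
    improves on the parent node, the threshold is None.
    """
    values = sorted(set(xs))
    best = (None, gini(labels))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, labels) if x < t]
        right = [y for x, y in zip(xs, labels) if x >= t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (t, score)
    return best


# Made-up sepal lengths for two species, for illustration only
xs = [4.4, 4.8, 5.0, 6.4, 6.9, 7.2]
labels = ["setosa"] * 3 + ["virginica"] * 3
print(best_split(xs, labels))
```

A full tree repeats this search over every input variable, keeps the best split, and then recurses into each child node until a stopping rule (such as a minimum node size) applies.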

Overlaying the predictions on the training data looks good

match_range<-function(ivec,n_length=10) seq(from=min(ivec),to=max(ivec),length=n_length)

pred.map<-expand.grid(Sepal.Length = match_range(training.data$Sepal.Length),

Sepal.Width = match_range(training.data$Sepal.Width))

pred.map$pred_class<-predict(dec.tree,pred.map,type="class")

training.data %>%

mutate(pred_class=predict(dec.tree,training.data,type="class"),

class_result=ifelse(as.character(pred_class)==as.character(Species),"Correct","Incorrect")

)->training.data

ggplot(pred.map,aes(Sepal.Length,Sepal.Width))+

geom_tile(aes(fill=pred_class),alpha=0.5)+

geom_point(data=training.data,aes(color=Species,size=class_result))+

labs(fill="Predicted",size = "Incorrectly\nLabeled")+

theme_bw(20)

It struggles more with the validation data, since it has never seen these points before and they are not quite the same as the training data.

valid.data<-div.iris %>% subset(data_div == "Validation")

valid.data %>%

mutate(pred_class=predict(dec.tree,valid.data,type="class"),

class_result=ifelse(as.character(pred_class)==as.character(Species),"Correct","Incorrect")

)->valid.data

ggplot(pred.map,aes(Sepal.Length,Sepal.Width))+

geom_tile(aes(fill=pred_class),alpha=0.5)+

geom_point(data=valid.data,aes(color=Species,size=class_result))+

labs(fill="Predicted",size = "Incorrectly\nLabeled")+

theme_bw(20)

The test data (normally we would not look at it at all yet, but wait until the very end) looks even worse, and an even smaller fraction is correctly matched.

valid.data<-div.iris %>% subset(data_div == "Testing")

valid.data %>%

mutate(pred_class=predict(dec.tree,valid.data,type="class"),

class_result=ifelse(as.character(pred_class)==as.character(Species),"Correct","Incorrect")

)->valid.data

ggplot(pred.map,aes(Sepal.Length,Sepal.Width))+

geom_tile(aes(fill=pred_class),alpha=0.5)+

geom_point(data=valid.data,aes(color=Species,size=class_result))+

labs(fill="Predicted",size = "Incorrectly\nLabeled")+

theme_bw(20)

Tricky Concrete Example: Classification

Taking a list of points (feature vectors) where each has an x1 and a y1 coordinate and a classification (Happy or Sad), we can show the data as a table

spiral.pts <- expand.grid(x = -50:50, y = -50:50) %>%

subset((x==0) | (y==0)) %>%

mutate(

r = sqrt(x^2+y^2),

th = r/60*2*pi,

x1 = cos(th)*x-sin(th)*y,

y1 = sin(th)*x+cos(th)*y,

class = ifelse(x==0,"Happy","Sad")

) %>%

select(x1,y1,class)

kable(spiral.pts %>% sample_n(5),digits=2)

| | x1 | y1 | class |
|---|---|---|---|
| 137 | -29.12 | -21.16 | Sad |
| 18 | -10.20 | 31.38 | Happy |
| 144 | -8.94 | -42.06 | Sad |
| 88 | -2.70 | -12.72 | Sad |
| 66 | 30.31 | 17.50 | Sad |

Or graphically

You can play around with neural networks and this data set at TensorFlow Playground
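For readers following along in Python, the spiral construction above can be sketched with the standard library alone: points on the two axes are rotated by an angle that grows with their radius (one full turn per 60 units), so the two straight lines become interleaved spirals. `spiral_points` is a hypothetical name; the constants follow the R code.

```python
from math import cos, pi, sin, sqrt


def spiral_points(extent=50, turn_radius=60):
    """Two interleaved spirals, following the R construction above.

    Points on the x and y axes (integer grid, -extent..extent) are rotated by
    th = r / turn_radius * 2 * pi, so the rotation grows with distance r from
    the origin. Points on the y axis (x == 0, including the origin) are
    'Happy', the rest of the x axis is 'Sad'.
    """
    points = []
    for x in range(-extent, extent + 1):
        for y in range(-extent, extent + 1):
            if x != 0 and y != 0:
                continue  # keep only points lying on one of the two axes
            r = sqrt(x * x + y * y)
            th = r / turn_radius * 2 * pi
            x1 = cos(th) * x - sin(th) * y
            y1 = sin(th) * x + cos(th) * y
            points.append((x1, y1, "Happy" if x == 0 else "Sad"))
    return points


pts = spiral_points()
print(len(pts), sum(label == "Happy" for _, _, label in pts))  # 201 101
```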

Dividing the data

We first decide on a split, in this case 60%, 30%, 10% for training, validation, and testing and randomly divide up the data.

div.spiral.pts<-spiral.pts %>%

mutate(

# generate a random number uniformly between 0 and 1

rand_value = runif(nrow(spiral.pts)),

# divide the data based on how high this number is into different groups

data_div = ifelse(rand_value<0.6,"Training",

ifelse(rand_value<0.9,"Validation",

"Testing")

)

) %>% select(-rand_value) # we don't need this anymore

div.spiral.pts %>% sample_n(4) %>% kable(digits=2)

| | x1 | y1 | class | data_div |
|---|---|---|---|---|
| 75 | 23.75 | -10.58 | Sad | Training |
| 94 | -5.20 | -4.68 | Sad | Validation |
| 125 | -19.42 | 14.11 | Sad | Training |
| 63 | 25.43 | 28.24 | Sad | Training |

ggplot(div.spiral.pts,aes(x1,y1))+

geom_point(aes(shape=data_div,color=class),size=2)+

labs(shape="Type")+

facet_wrap(~data_div)+

coord_equal()+

theme_bw(20)

Using a simple decision tree

Making a decision tree can be done by providing the output (class) as a function of the input, in this case just a combination of x1 and y1 (~x1+y1). From this formula, rpart builds the tree by repeatedly splitting the data on one input at a time.

library(rpart)

library(rpart.plot)

training.data <- div.spiral.pts %>% subset(data_div == "Training")

dec.tree<-rpart(class~x1+y1,data=training.data)

A tree can be visualized graphically as a trunk (the topmost node) dividing progressively into smaller subnodes

or as a list of rules to apply

print(dec.tree)

## n= 119

##

## node), split, n, loss, yval, (yprob)

## * denotes terminal node

##

## 1) root 119 56 Sad (0.47058824 0.52941176)

## 2) x1< -33.01294 8 0 Happy (1.00000000 0.00000000) *

## 3) x1>=-33.01294 111 48 Sad (0.43243243 0.56756757)

## 6) x1>=-18.4582 94 47 Happy (0.50000000 0.50000000)

## 12) y1< 6.003109 66 28 Happy (0.57575758 0.42424242)

## 24) y1>=-5.691917 32 8 Happy (0.75000000 0.25000000) *

## 25) y1< -5.691917 34 14 Sad (0.41176471 0.58823529)

## 50) y1< -18.36846 16 6 Happy (0.62500000 0.37500000) *

## 51) y1>=-18.36846 18 4 Sad (0.22222222 0.77777778) *

## 13) y1>=6.003109 28 9 Sad (0.32142857 0.67857143)

## 26) y1>=24.87892 12 5 Happy (0.58333333 0.41666667) *

## 27) y1< 24.87892 16 2 Sad (0.12500000 0.87500000) *

## 7) x1< -18.4582 17 1 Sad (0.05882353 0.94117647) *

Overlaying the predictions on the training data looks good

pred.map<-expand.grid(x1 = -50:50, y1 = -50:50)

pred.map$pred_class<-ifelse(predict(dec.tree,pred.map)[,1]>0.5,"Happy","Sad")

training.data$pred_class<-ifelse(predict(dec.tree,training.data)[,1]>0.5,"Happy","Sad")

ggplot(pred.map,aes(x1,y1))+

geom_tile(aes(fill=pred_class),alpha=0.5)+

geom_point(data=training.data,aes(color=class,size=(pred_class!=class)))+

labs(fill="Predicted",size = "Incorrectly\nLabeled")+

theme_bw(20)

It struggles more with the validation data, since it has never seen these points before and they are not quite the same as the training data.

valid.data<-div.spiral.pts %>% subset(data_div == "Validation")

valid.data$pred_class<-ifelse(predict(dec.tree,valid.data)[,1]>0.5,"Happy","Sad")

ggplot(pred.map,aes(x1,y1))+

geom_tile(aes(fill=pred_class),alpha=0.5)+

geom_point(data=valid.data,aes(color=class,size=(pred_class!=class)))+

labs(fill="Predicted",size = "Incorrectly\nLabeled")+

theme_bw(20)

The test data (normally we would not look at it at all yet, but wait until the very end) looks even worse, and an even smaller fraction is correctly matched.

valid.data<-div.spiral.pts %>% subset(data_div == "Testing")

valid.data$pred_class<-ifelse(predict(dec.tree,valid.data)[,1]>0.5,"Happy","Sad")

ggplot(pred.map,aes(x1,y1))+

geom_tile(aes(fill=pred_class),alpha=0.5)+

geom_point(data=valid.data,aes(color=class,size=(pred_class!=class)))+

labs(fill="Predicted",size = "Incorrectly\nLabeled")+

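theme_bw(20)

We can choose to make more complicated trees by changing the formula to something more detailed, like

$$ \text{class} \sim x_1 + y_1 + x_1^2 + y_1^2 + \sin(x_1/5) + \sin(y_1/5) $$

dec.tree<-rpart(class~x1+y1+I(x1^2)+I(y1^2)+sin(x1/5)+sin(y1/5),data=training.data)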

pred.map$pred_class<-ifelse(predict(dec.tree,pred.map)[,1]>0.5,"Happy","Sad")

training.data$pred_class<-ifelse(predict(dec.tree,training.data)[,1]>0.5,"Happy","Sad")

ggplot(pred.map,aes(x1,y1))+

geom_tile(aes(fill=pred_class),alpha=0.5)+

geom_point(data=training.data,aes(color=class,size=(pred_class!=class)))+

labs(fill="Predicted",size = "Incorrectly\nLabeled")+

theme_bw(20)

Parameters

library(igraph)

make.im.proc.chain<-function(root.node="Raw\nImages",filters=c(),filter.parms=c(),

segmentation=c(),segmentation.parms=c(),

analysis=c(),analysis.parms=c()) {

node.names<-c("Raw\nImages",

filter.parms,filters,

segmentation.parms,segmentation,

analysis.parms,analysis

)

c.mat<-matrix(0,length(node.names),length(node.names))

colnames(c.mat)<-node.names

rownames(c.mat)<-node.names

for(cFilt in filters) {

c.mat["Raw\nImages",cFilt]<-1

for(cParm in filter.parms) c.mat[cParm,cFilt]<-1

for(cSeg in segmentation) {

c.mat[cFilt,cSeg]<-1

for(cParm in segmentation.parms) c.mat[cParm,cSeg]<-1

for(cAnal in analysis) {

c.mat[cSeg,cAnal]<-1

for(cParm in analysis.parms) c.mat[cParm,cAnal]<-1

}

}

}

g<-graph.adjacency(c.mat,mode="directed")

V(g)$degree <- degree(g)

V(g)$label <- V(g)$name

V(g)$color <- "lightblue"

V(g)["Raw\nImages"]$color<-"lightgreen"

for(cAnal in analysis) V(g)[cAnal]$color<-"pink"

V(g)$size<-30

for(cParam in c(filter.parms,segmentation.parms,analysis.parms)) {

V(g)[cParam]$color<-"grey"

V(g)[cParam]$size<-25

}

E(g)$width<-2

g

}

g<-make.im.proc.chain(filters=c("Gaussian\nFilter"),

filter.parms=c("3x3\nNeighbors","0.5 Sigma"),

segmentation=c("Threshold"),

segmentation.parms=c("100"),

analysis=c("Shape\nAnalysis","Thickness\nAnalysis")

)

plot(g) # alternative layouts: layout.circle, layout.fruchterman.reingold, layout.kamada.kawai

- Green are the raw images we start with (measurements)

- Blue are processing steps

- Gray are user input parameters

- Pink are the outputs

The Full Chain

library(igraph)

g<-make.im.proc.chain(filters=c("Gaussian\nFilter","Median\nFilter","Diffusion\nFilter","No\nFilter",

"Laplacian\nFilter"),

segmentation=c("Threshold","Hysteresis\nThreshold","Automated"),

analysis=c("Shape\nAnalysis","Thickness\nAnalysis","Distribution\nAnalysis",

"Skeleton\nAnalysis","2 Point\nCorr","Curvature")

)

plot(g,layout=layout.reingold.tilford)

The Full Chain (with Parameters)

g<-make.im.proc.chain(filters=c("Gaussian\nFilter","Median\nFilter","Diffusion\nFilter"),

filter.parms=c("3x3\nNeighbors","5x5\nNeighbors","7x7\nNeighbors",

"0.5 Sigma","1.0 Sigma","1.2 Sigma"),

segmentation=c("Threshold","Hysteresis\nThreshold","Automated"),

segmentation.parms=paste(seq(90,110,length.out=3)),

analysis=c("Shape\nAnalysis","Thickness\nAnalysis","Distribution\nAnalysis","Skeleton\nAnalysis","2 Point\nCorr")

)

plot(g,layout=layout.lgl(g,maxiter=10000,root=1))

- A mess: over 1080 combinations for just one sample (without even exploring a very large range of threshold values)

- Calculating this for even one sample can take days (or weeks, or years)

- 512 x 512 x 512 foam sample → 12 weeks of processing time

- 1024 x 1024 x 1024 femur bone → 1.9 years

- Not all samples are the same

- Once the analysis is run, we have a huge amount of data

- femur bone → 60 million shapes analyzed

- What do we even want?

- How do we judge the different results?
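The options multiply quickly. The sketch below enumerates a full factorial grid of the choices shown in the graph above. It assumes that every filter is paired with every neighborhood size and every sigma, and every segmentation with every threshold. This pairing rule is our assumption, so the count need not match the figure quoted above. The option labels are shortened node names.

```r
# Full factorial grid of processing choices (hypothetical pairing assumption:
# every option at one step is combined with every option at the other steps)
sweep.grid <- expand.grid(
  filter       = c("Gaussian", "Median", "Diffusion"),
  neighborhood = c("3x3", "5x5", "7x7"),
  sigma        = c(0.5, 1.0, 1.2),
  segmentation = c("Threshold", "Hysteresis", "Automated"),
  threshold    = seq(90, 110, length.out = 3),
  analysis     = c("Shape", "Thickness", "Distribution", "Skeleton", "2 Point Corr"),
  stringsAsFactors = FALSE
)
n.combinations <- nrow(sweep.grid)  # 3 * 3 * 3 * 3 * 3 * 5

# Sampling the threshold at every intensity unit from 90 to 110 instead of at 3 values
n.fine <- n.combinations / 3 * length(seq(90, 110, by = 1))

cat("Combinations:", n.combinations, "\n")
cat("With one threshold per intensity unit:", n.fine, "\n")
```

Multiplying either count by the processing time of a single run gives a rough idea of the total cost.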

Qualitative vs Quantitative

Given the complexity of the tree, we need to do some pruning

Qualitative Assessment

- Evaluating metrics using visual feedback

- Compare with expectations from other independent techniques or approaches

- Are there artifacts which are included in the output?

- Do the shapes look correct?

- Are they distributed as expected?

- Is their orientation meaningful?

title.fun<-function(file.name) ""

show.pngs.as.grid(Sys.glob("ext-figures/poros*.png"),title.fun,zoom=0.5)

Quantitative Metrics

With a quantitative approach, we can calculate specific shape or distribution metrics on the sample for each parameter value and establish the relationship between parameter and metric.

Parameter Sweep

This is usually done with a parameter sweep: taking one (or more) parameters and varying them between reasonable bounds (judged qualitatively).
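A parameter sweep can be sketched on synthetic data. The image below is bright disks on a noisy background, made up purely for illustration. We threshold it at a range of values and record one simple metric, the foreground fraction, at each value. All names and numbers are hypothetical; a real study would record the shape metrics discussed in these slides instead.

```r
set.seed(42)
n <- 128
# Synthetic image: Gaussian noise background with 10 bright disks (hypothetical data)
xy <- expand.grid(x = 1:n, y = 1:n)               # x varies fastest, like matrix rows
img <- matrix(rnorm(n * n, mean = 100, sd = 10), n, n)
centers <- matrix(runif(20, 10, n - 10), ncol = 2)
for (i in seq_len(nrow(centers))) {
  inside <- (xy$x - centers[i, 1])^2 + (xy$y - centers[i, 2])^2 < 6^2
  img[inside] <- img[inside] + 50                 # disks are 50 units brighter
}

# Sweep the threshold between bounds judged reasonable by eye
thresholds <- seq(90, 160, by = 5)
sweep <- data.frame(
  thresh      = thresholds,
  fg.fraction = sapply(thresholds, function(t) mean(img > t))  # metric per parameter
)
print(sweep)
```

`plot(fg.fraction ~ thresh, data = sweep, type = "b")` shows the metric as a function of the parameter. A flat region suggests that the result is robust to the exact threshold chosen.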

source('../common/shapeAnalysisProcess.R')

source('../common/commonReportFunctions.R')

# read and correct the coordinate system

thresh.fun<-function(x) {

pth<-rev(strsplit(x,"/")[[1]])[2]

t<-strsplit(pth,"_")[[1]][3]

as.numeric(substring(t,2,nchar(t)))

}

readfcn<-function(x) cbind(compare.foam.corrected(x,

checkProj=F

#force.scale=0.011 # force voxel size to be 11um

),

thresh=thresh.fun(x) # how to parse the sample names

)

# Where are the csv files located

rootDir<-"../common/data/mcastudy"

clpor.files<-Sys.glob(file.path(rootDir,"a*","lacun_0.csv")) # list all of the files

# Read in all of the files

all.lacun<-ldply(clpor.files,readfcn,.parallel=T) # .parallel=T needs a registered foreach backend (e.g. doParallel)

ggplot(all.lacun,aes(y=VOLUME*1e9,x=thresh))+

geom_jitter(alpha=0.1)+geom_smooth()+

theme_bw(24)+labs(y="Volume (um3)",x="Threshold Value",color="Threshold")+ylim(0,1000)

Is it always the same?

ggplot(subset(all.lacun,thresh %% 1000==0),aes(y=VOLUME*1e9,x=as.factor(thresh)))+

geom_violin()+

theme_bw(24)+labs(y="Volume (um3)",x="Threshold Value",color="Threshold")+ylim(0,1000)

ggplot(all.lacun,aes(y=PCA1_Z,x=thresh))+

geom_jitter(alpha=0.1)+geom_smooth()+

theme_bw(24)+labs(y="Orientation",x="Threshold Value",color="Threshold")

Sensitivity

Sensitivity is defined in control system theory as the change in the value of an output against the change in the input.

$$ S = \frac{|\Delta \textrm{Metric}|}{|\Delta \textrm{Parameter}|} $$

Such a strict definition is not particularly useful for image processing. A threshold has units of intensity, while a metric such as volume has units of m³. The sensitivity therefore becomes volume per intensity, which is hard to interpret and cannot be compared directly between metrics.
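The strict definition can still be estimated from sweep results using finite differences between neighboring parameter values. The threshold and volume numbers below are made up. The point is that the result carries units of volume per intensity unit.

```r
# Absolute sensitivity S = |dMetric| / |dParameter| between neighboring sweep points
abs.sensitivity <- function(param, metric) {
  ord <- order(param)                 # make sure the sweep is in parameter order
  p <- param[ord]
  m <- metric[ord]
  data.frame(param.mid = (head(p, -1) + tail(p, -1)) / 2,  # midpoint of each step
             S = abs(diff(m)) / abs(diff(p)))
}

thresh <- c(90, 100, 110)             # threshold in intensity units (made-up sweep)
volume <- c(500, 450, 300)            # mean volume in um^3 (made-up values)
s <- abs.sensitivity(thresh, volume)
print(s)                              # S is in um^3 per intensity unit
```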

Practical Sensitivity

A more common approach is to estimate the variation in the parameter between images or within a single image (automatic threshold methods can be useful for this) and define the sensitivity based on this variation. It is also common to normalize by the mean value of the metric, so the result is a percentage.

$$ S = \frac{\max(\textrm{Metric})-\min(\textrm{Metric})}{\textrm{avg}(\textrm{Metric})} $$
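The practical definition fits in a single function. Multiplying by 100 turns it into a percentage, as the calc.sens function later in these slides does. The metric values below are invented for illustration.

```r
# Normalized (practical) sensitivity in percent: 100 * (max - min) / mean
practical.sensitivity <- function(metric) {
  100 * (max(metric) - min(metric)) / mean(metric)
}

# Hypothetical metric values over a plausible range of thresholds
count  <- c(980, 1000, 1020)
volume <- c(500, 450, 300)
sens <- c(Count  = practical.sensitivity(count),
          Volume = practical.sensitivity(volume))
print(sens)
```

The result is unitless, so count and volume sensitivities can be compared directly. The strict definition does not allow this.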

Sensitivity: Real Measurements

In this graph, the sensitivity is the magnitude of the slope: the steeper the slope, the more the metric changes for a small change in the parameter.

poresum<-function(all.data) ddply(all.data,.(thresh),function(c.sample) {

data.frame(Count=nrow(c.sample),

Volume=mean(c.sample$VOLUME*1e9),

Stretch=mean(c.sample$AISO),

Oblateness=mean(c.sample$OBLATENESS),

#Lacuna_Density_mm=1/mean(c.sample$DENSITY_CNT),

Length=mean(c.sample$PROJ_PCA1*1000),

Width=mean(c.sample$PROJ_PCA2*1000),

Height=mean(c.sample$PROJ_PCA3*1000),

Orientation=mean(abs(c.sample$PCA1_Z)))

})

comb.summary<-cbind(poresum(all.lacun),Phase="Lacuna")

splot<-ggplot(comb.summary,aes(x=thresh))

splot+geom_line(aes(y=Count))+geom_point(aes(y=Count))+scale_y_log10()+

theme_bw(24)+labs(y="Object Count",x="Threshold",color="Phase")

Comparing Different Variables

We see that where the count sensitivity is best (lowest), the sensitivity of the volume and anisotropy is highest.

calc.sens<-function(in.df) {

data.frame(sens.cnt=100*with(in.df,(max(Count)-min(Count))/mean(Count)),

sens.vol=100*with(in.df,(max(Volume)-min(Volume))/mean(Volume)),

sens.stretch=100*with(in.df,(max(Stretch)-min(Stretch))/mean(Stretch))

)

}

sens.summary<-ddply.cutcols(comb.summary,.(cut_interval(thresh,5)),calc.sens)

ggplot(sens.summary,aes(x=thresh))+

geom_line(aes(y=sens.cnt,color="Count"))+

geom_line(aes(y=sens.vol,color="Volume"))+

geom_line(aes(y=sens.stretch,color="Anisotropy"))+

labs(x="Threshold",y="Sensitivity (%)",color="Metric")+

theme_bw(20)

Which metric is more important?

Unit Testing

In computer programming, unit testing is a method by which individual units of source code are tested to determine whether they are fit for use. A unit is a set of one or more computer program modules, together with associated control data, usage procedures, and operating procedures.

- Intuitively, one can view a unit as the smallest testable part of an application

- Unit testing is possible with every language

- Most languages (Java, C++, Matlab, R, Python) have built-in or widely used frameworks for automated testing and reporting

The first requirement for unit testing to work well is to have your tools divided into small, independent parts (functions)

- Each part can then be tested independently (unit testing)

- If the tests are well done, units can be changed and tested independently

- Makes upgrading or expanding tools easy

- The entire path can be tested (integration testing)

- Catches mistakes in integration or glue

Ideally with realistic but simulated test data

- The utility of the testing is only as good as the tests you make

Example

- Given the following function

function vxCnt=countVoxs(inImage)

- We can write the following tests

- testEmpty2d

assert(countVoxs(zeros(3,3)) == 0)

- testEmpty3d

assert(countVoxs(zeros(3,3,3)) == 0)

- testDiag3d

assert(countVoxs(eye(3)) == 3)
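The same tests can be written in R, the language used elsewhere in these slides, using base R's stopifnot. We assume here that countVoxs counts non-zero voxels. run.tests is a hypothetical minimal runner. In practice, a framework such as testthat takes care of test discovery and reporting.

```r
# countVoxs: number of non-zero voxels in a 2D or 3D array (assumed definition)
countVoxs <- function(inImage) sum(inImage != 0)

# Tests mirroring testEmpty2d, testEmpty3d and testDiag3d
test_empty_2d <- function() stopifnot(countVoxs(matrix(0, 3, 3)) == 0)
test_empty_3d <- function() stopifnot(countVoxs(array(0, dim = c(3, 3, 3))) == 0)
test_diag     <- function() stopifnot(countVoxs(diag(3)) == 3)  # eye(3) in Matlab

# Hypothetical minimal runner: report pass/fail for every test instead of stopping
run.tests <- function(tests) {
  sapply(tests, function(t) tryCatch({ t(); TRUE }, error = function(e) FALSE))
}

results <- run.tests(list(empty2d = test_empty_2d,
                          empty3d = test_empty_3d,
                          diag    = test_diag))
print(results)
```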

Unit Testing: Examples

- Given the following function

function shapeTable=shapeAnalysis(inImage)

- We should decompose the task into sub-components

function clImage=componentLabel(inImage)

function objInfo=analyzeObject(inObject)

function vxCnt=countVoxs(inObject)

function covMat=calculateCOV(inObject)

function shapeT=calcShapeT(covMat)

function angle=calcOrientation(shapeT)

function aniso=calcAnisotropy(shapeT)
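Below is a minimal sketch of such a decomposition in R for 2D images, assuming 4-connectivity. componentLabel is a simple flood fill. analyzeObject takes the label image and an object id, a signature chosen for this illustration, and reports voxel count and center. Each function is small enough to test on its own, and shapeAnalysis only glues them together.

```r
# componentLabel: label 4-connected foreground regions with a stack-based flood fill
componentLabel <- function(inImage) {
  nr <- nrow(inImage); nc <- ncol(inImage)
  labels <- matrix(0L, nr, nc)
  current <- 0L
  offsets <- list(c(-1, 0), c(1, 0), c(0, -1), c(0, 1))
  for (start in which(inImage != 0)) {      # linear indices, column-major order
    if (labels[start] != 0L) next           # already part of an earlier object
    current <- current + 1L
    labels[start] <- current
    stack <- start
    while (length(stack) > 0) {
      idx <- stack[length(stack)]
      stack <- stack[-length(stack)]
      row.i <- (idx - 1) %% nr + 1
      col.i <- (idx - 1) %/% nr + 1
      for (d in offsets) {
        rr <- row.i + d[1]; cc <- col.i + d[2]
        if (rr >= 1 && rr <= nr && cc >= 1 && cc <= nc &&
            inImage[rr, cc] != 0 && labels[rr, cc] == 0L) {
          labels[rr, cc] <- current
          stack <- c(stack, (cc - 1) * nr + rr)
        }
      }
    }
  }
  labels
}

# analyzeObject (hypothetical signature): voxel count and center of one labeled object
analyzeObject <- function(labels, id) {
  pos <- which(labels == id, arr.ind = TRUE)
  data.frame(id = id, voxels = nrow(pos),
             center.row = mean(pos[, 1]), center.col = mean(pos[, 2]))
}

# shapeAnalysis: glue code only, easy to cover with an integration test
shapeAnalysis <- function(inImage) {
  labels <- componentLabel(inImage)
  do.call(rbind, lapply(seq_len(max(labels)), function(id) analyzeObject(labels, id)))
}

img <- matrix(0, 6, 6)
img[1:2, 1:2] <- 1    # a 4-voxel square
img[5, 3:6] <- 1      # a 4-voxel line
res <- shapeAnalysis(img)
print(res)
```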

Unit Testing in ImageJ

Unit Testing in KNIME

Java-based unit testing (JUnit) can be used before any of the plugins are compiled. Additionally, entire workflows can be built to test the objects using special testing nodes like

- difference node (check if two values are different)

- disturber node (insert random / missing values to determine fault tolerance)
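The disturber-node idea can also be reproduced in a script. We inject missing values into the input and check that the function under test still returns a usable result. disturb and meanVolume are hypothetical helpers, written only for this illustration.

```r
# disturb (hypothetical): replace a given fraction of the values with NA
disturb <- function(x, fraction = 0.1) {
  n.bad <- round(fraction * length(x))
  x[sample(length(x), n.bad)] <- NA
  x
}

# Function under test (hypothetical): mean object volume, should tolerate missing values
meanVolume <- function(volumes) mean(volumes, na.rm = TRUE)

set.seed(1)
volumes <- runif(100, 100, 1000)
disturbed <- disturb(volumes, 0.2)
stopifnot(sum(is.na(disturbed)) == 20)   # the disturbance was actually applied
result <- meanVolume(disturbed)
stopifnot(is.finite(result))             # fault tolerance check
print(result)
```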

Test Driven Programming

Test-driven programming is a style of programming where the tests are written before the functional code, serving as very concrete specifications. It is easy to estimate how much work is left, since you can automatically see how many of the tests pass. You and your collaborators are also clear on what the system must do.

- shapeAnalysis must give an anisotropy of 0 when we input a sphere

- shapeAnalysis must give the center of volume within 0.5 pixels

- shapeAnalysis must run on a 1000x1000 image in under 30 seconds
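The three specifications can be written as tests before any implementation exists, because R only looks up shapeAnalysis when a test runs. The implementation that follows is a hypothetical single-object version. It derives anisotropy from the eigenvalues of the voxel-coordinate covariance. We define anisotropy here as (max - min) / max of the square roots of the eigenvalues; this definition is our assumption.

```r
# --- Tests, written first: they encode the three specifications ---
test_sphere_anisotropy <- function() {
  s <- shapeAnalysis(makeSphere(radius = 8, size = 21))
  stopifnot(s$anisotropy < 0.01)
}
test_center_of_volume <- function() {
  true.center <- c(10.3, 11, 11.6)
  s <- shapeAnalysis(makeSphere(radius = 8, size = 21, center = true.center))
  stopifnot(all(abs(s$center - true.center) <= 0.5))
}
test_runtime_2d <- function() {
  img <- matrix(0, 1000, 1000)
  img[400:600, 300:700] <- 1
  elapsed <- system.time(shapeAnalysis(img))[["elapsed"]]
  stopifnot(elapsed < 30)
}

# --- Test fixture: binary sphere in a size x size x size array ---
makeSphere <- function(radius, size, center = rep((size + 1) / 2, 3)) {
  g <- expand.grid(x = 1:size, y = 1:size, z = 1:size)
  inside <- (g$x - center[1])^2 + (g$y - center[2])^2 + (g$z - center[3])^2 <= radius^2
  array(as.numeric(inside), dim = c(size, size, size))
}

# --- Implementation, written after the tests (hypothetical) ---
shapeAnalysis <- function(inImage) {
  pos <- which(inImage != 0, arr.ind = TRUE)   # one row of coordinates per voxel
  ev <- eigen(cov(pos), symmetric = TRUE, only.values = TRUE)$values
  radii <- sqrt(pmax(ev, 0))                   # guard against tiny negative round-off
  list(center = unname(colMeans(pos)),
       anisotropy = (max(radii) - min(radii)) / max(radii))
}

tests <- list(anisotropy = test_sphere_anisotropy,
              center     = test_center_of_volume,
              runtime    = test_runtime_2d)
passed <- sapply(tests, function(t) tryCatch({ t(); TRUE }, error = function(e) FALSE))
print(passed)
```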

Visualization

One of the biggest problems in big science is trying to visualize a lot of heterogeneous data.

- Tables are difficult to interpret

- 3D Visualizations are very difficult to compare visually

- Conflicting needs: simple single-value results versus all of the data, which is needed to look for trends and find problems

Bad Graphs

There are too many graphs which say

- ‘my data is very complicated’

- ‘I know how to use __ toolbox in Matlab/R/Mathematica’

- Most programs by default make poor plots

- Good visualizations take time

Key Ideas

- What is my message?

- Does the graphic communicate it clearly?

- Is a graphic representation really necessary?

- Does every line / color serve a purpose?

- Pretend ink is very expensive

Simple Rules

- Never use 3D graphics when they can be avoided (unless you want to be deliberately misleading); our visual system is not well suited for comparing heights of different

- Pie charts can also be hard to interpret

- Background color should almost always be white (not light gray)

- Use color palettes adapted to human visual sensitivity

What is my message?

- Plots to “show the results” or “get a feeling” are usually not good

- Focus on a single, simple message

- X is a little bit correlated with Y

Does my graphic communicate it clearly?

- Too much data makes it very difficult to derive a clear message

- Filter and reduce information until it is extremely simple

ggplot(test.data,aes(x,y))+

stat_binhex(bins=20)+

geom_smooth(method="lm",aes(color="Fit"))+

coord_equal()+

theme_bw(20)+guides(color="none")

ggplot(test.data,aes(x,y))+

geom_density2d(aes(color="Contour"))+

geom_smooth(method="lm",aes(color="Linear Fit"))+

coord_equal()+

labs(color="Type")+

theme_bw(20)

Grammar of Graphics

- What is a grammar?

- Set of rules for constructing and validating a sentence

- Specifies the relationship and order between the words constituting the sentence

- How does this apply to graphics?

If we develop a consistent way of expressing graphics (sentences) in terms of elements (words), we can compose and decompose graphics easily.

The most important modern work in graphical grammars is “The Grammar of Graphics” by Wilkinson, Anand, and Grossman (2005). This work built on earlier work by Bertin (1983) and proposed a grammar that can be used to describe and construct a wide range of statistical graphics.

This can be applied in R using the ggplot2 library (H. Wickham. ggplot2: elegant graphics for data analysis. Springer New York, 2009.)

Grammar Explained

Normally we think of plots in terms of some sort of data which is fed into a plot command that produces a picture

- In Excel you select a range and plot-type and click “Make”

- In Matlab you run

plot(xdata,ydata,color/shape)

- These produce entire graphics (sentences), or at least phrases, in one go, and so they abstract away the idea of grammar.

- If you spoke by finding entire sentences in a book, it would be very inefficient; it is much better to build up word by word

Grammar

Separate the graph into its component parts

- Data Mapping

- var1 → x, var2 → y

- Points

- Axes / Coordinate System

- Labels / Annotation

Construct graphics by focusing on each portion independently.
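In ggplot2 (the library mentioned above), each component is added separately with +, which mirrors the list above. The data frame and variable names are invented for illustration.

```r
library(ggplot2)

set.seed(2017)
test.df <- data.frame(var1 = rnorm(100))
test.df$var2 <- 0.5 * test.df$var1 + rnorm(100, sd = 0.5)

p <- ggplot(test.df, aes(x = var1, y = var2))  # data mapping: var1 -> x, var2 -> y
p <- p + geom_point()                          # points
p <- p + coord_equal()                         # axes / coordinate system
p <- p + labs(x = "Variable 1", y = "Variable 2",
              title = "Built one component at a time")  # labels / annotation
```

Calling `print(p)` draws the plot. Each component can be swapped independently; for example, replace `geom_point()` with `geom_density2d()` and leave the other components unchanged.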

Wrapping up

- I am not a statistician

- This is not a statistics course

- If you have questions or concerns, both ETHZ and Uni Zurich offer free consultation with real statisticians

They are rarely bearers of good news

Simulations (even simple ones) are very helpful (see StatisticalSignificanceHunter)

- Try to understand the tests you are performing
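Here is a simple simulation of this kind, sketched in base R. It illustrates the idea and is not the StatisticalSignificanceHunter tool itself. We run many t-tests on two groups drawn from the same distribution and count how often the result comes out "significant" at p < 0.05.

```r
set.seed(123)
n.experiments <- 1000
p.values <- replicate(n.experiments, {
  a <- rnorm(20)   # "control" group: pure noise
  b <- rnorm(20)   # "treatment" group drawn from the same distribution
  t.test(a, b)$p.value
})
false.positive.rate <- mean(p.values < 0.05)
print(false.positive.rate)  # close to 0.05 even though there is no real effect
```

About 5% of the comparisons are "significant" even though no effect exists. If a study tests 20 independent metrics, the chance that at least one of them comes out significant is 1 - 0.95^20, or about 0.64.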