This is a walkthrough of a simple 3-analysis example pipeline.

The goal of the pipeline is to multiply two long numbers. For the sake of the example, we pretend that this cannot be done in one operation on a single machine, so we split the task into subtasks, each multiplying the first long number by an individual digit of the second long number. In the last step the partial products are shifted and added together to yield the final product.
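The decomposition described above can be sketched in a few lines of shell. This is only an illustration of the arithmetic, not the pipeline's actual code: the function name `long_mult` and the sample operands are made up for this sketch, and it relies on the shell's native integer arithmetic, so it assumes operands without leading zeros that are small enough not to overflow.

```shell
# Hypothetical helper, not part of eHive: multiply a by b
# using one partial product per digit of b.
long_mult() {
    local a=$1 b=$2
    local total=0 place=1 rest digit partial
    # Consume the digits of b from least to most significant.
    while [ -n "$b" ]; do
        rest=${b%?}                 # everything except the last digit
        digit=${b#"$rest"}          # the last digit
        partial=$(( a * digit ))    # one subtask: a times a single digit
        total=$(( total + partial * place ))   # shift, then add
        place=$(( place * 10 ))
        b=$rest
    done
    echo "$total"
}

long_mult 1234 567    # prints 699678
```

Here the three partial products are 8638, 7404 and 6170; shifted by zero, one and two decimal places they sum to 699678. In the pipeline each partial product would be a separate job, and the final shift-and-add a job of its own.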


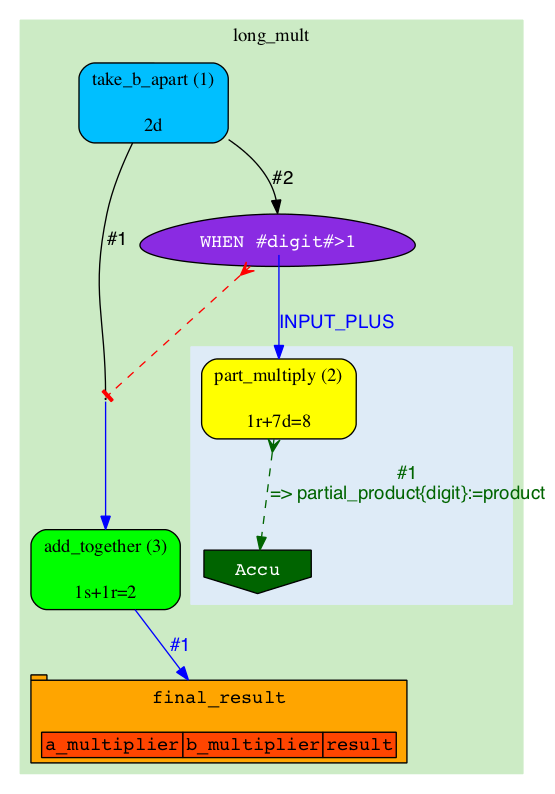

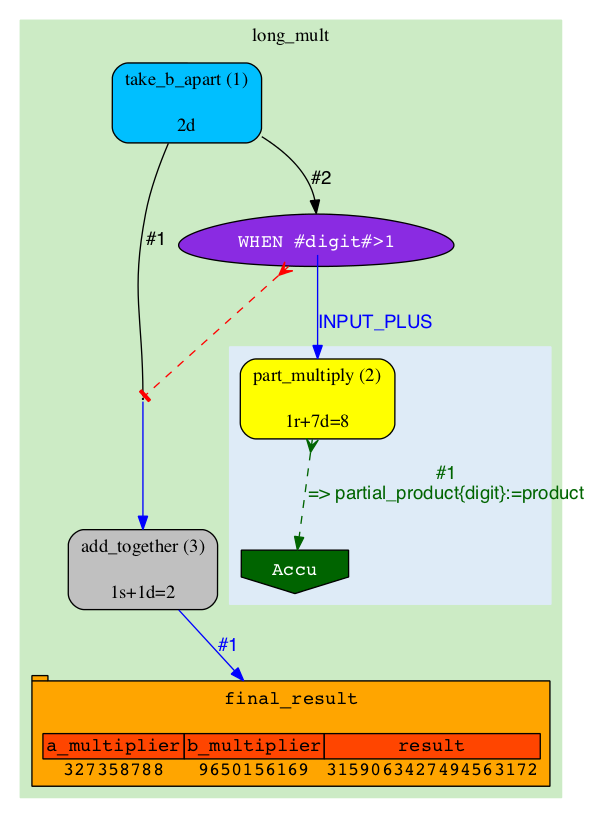

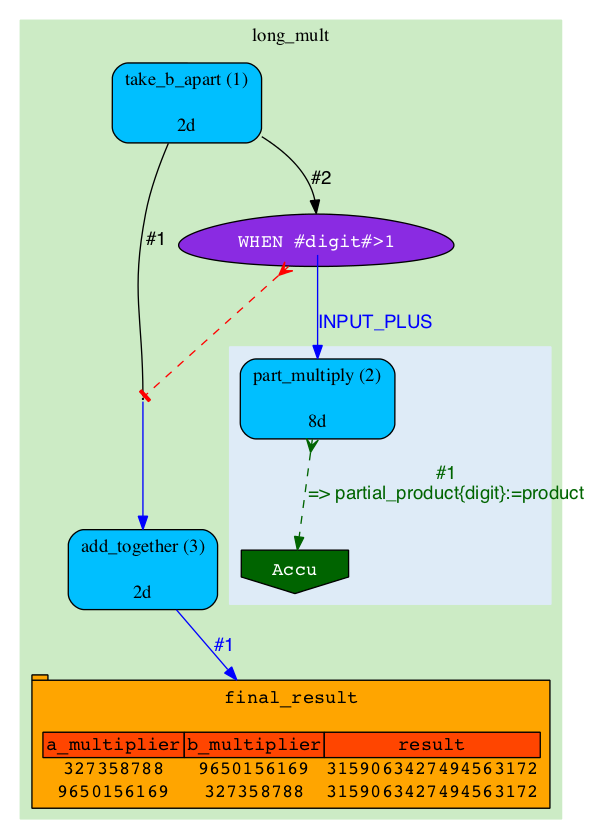

A J-diagram is a directed acyclic graph in which nodes represent Jobs, Semaphores or Accumulators, and edges represent relationships and dependencies. Most of these objects are created dynamically during pipeline execution, so you will see a lot of action here: the J-diagram keeps growing. J-diagrams can be generated at any moment during a pipeline's execution by running Hive's visualize_jobs.pl script (new in version 2.5).

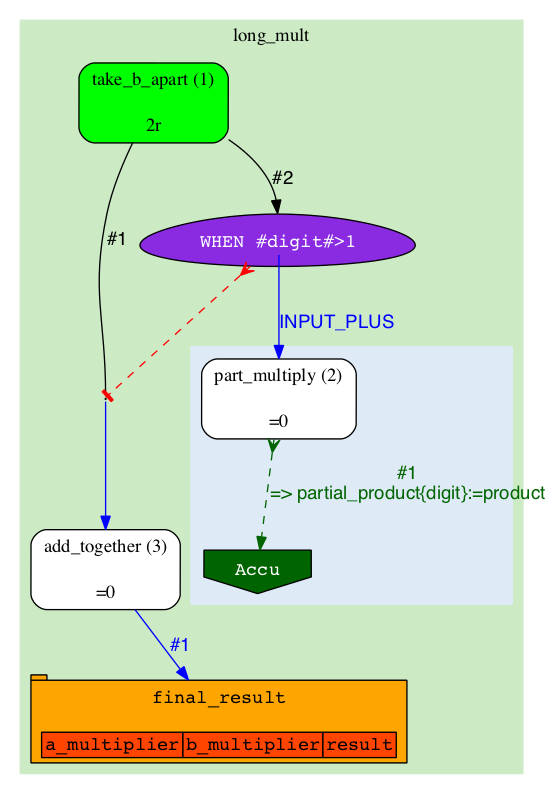

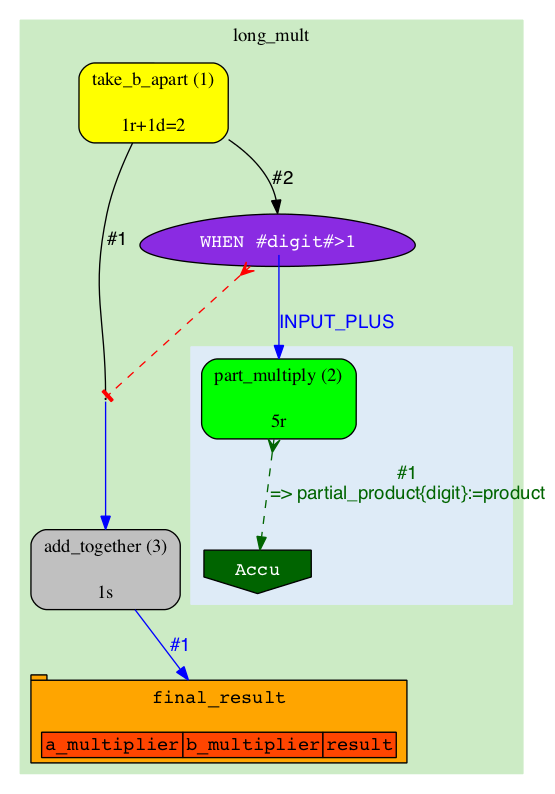

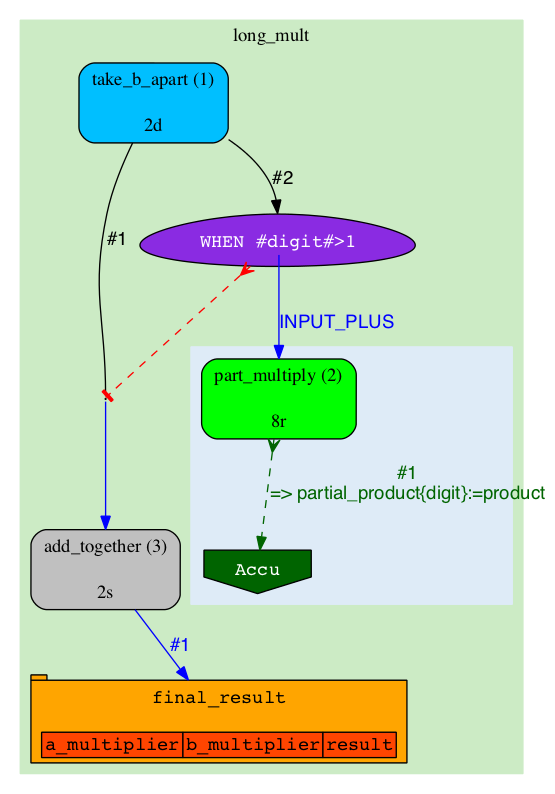

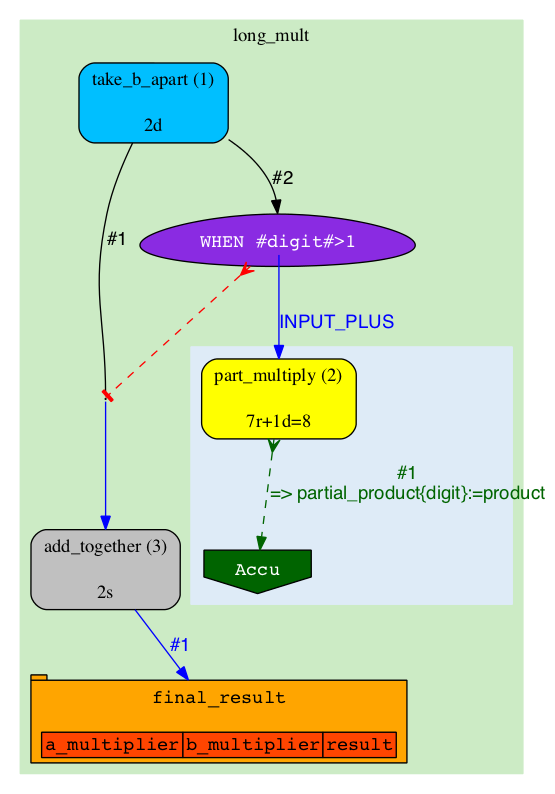

An A-diagram is a directed graph in which most of the nodes represent Analyses and the edges represent Rules. As a whole it represents the structure of the pipeline, which is normally static; the only elements that change are job counts and analysis colours. A-diagrams can be generated at any moment during a pipeline's execution by running Hive's generate_graph.pl script.

The main bulk of this document is a commented series of snapshots of both types of diagrams during the execution of the pipeline.

They can be approximately reproduced by running a sequence of commands, similar to these, in a terminal:

export PIPELINE_URL=sqlite:///lg4_long_mult.sqlite # An SQLite file is enough to handle this pipeline

init_pipeline.pl Bio::EnsEMBL::Hive::Examples::LongMult::PipeConfig::LongMult_conf -pipeline_url $PIPELINE_URL # Initialize the pipeline database from a PipeConfig file

runWorker.pl -url $PIPELINE_URL -job_id $JOB_ID # Run a specific job; this lets you force your own order of execution. Run a few of these.

beekeeper.pl -url $PIPELINE_URL -analyses_pattern $ANALYSIS_NAME -sync # Force the system to recalculate job counts and determine states of analyses

visualize_jobs.pl -url $PIPELINE_URL -out long_mult_jobs_${STEP_NUMBER}.png # To make a J-diagram snapshot (it is convenient to have synchronized numbering)

generate_graph.pl -url $PIPELINE_URL -out long_mult_analyses_${STEP_NUMBER}.png # To make an A-diagram snapshot (it is convenient to have synchronized numbering)
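To keep the numbering of the two snapshot series synchronized, the last four commands can be wrapped in a small helper. The sketch below is an assumption-laden convenience, not something shipped with eHive: the names `run`, `snapshot_step` and `DRY_RUN` are hypothetical, it expects `PIPELINE_URL` to be set as above, and it syncs the whole pipeline rather than a single analysis. It uses only the command-line options already shown.

```shell
# Hypothetical wrapper, not part of eHive.
# With DRY_RUN=1 the commands are printed instead of executed.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: snapshot_step STEP_NUMBER JOB_ID   (plain integers, no leading zeros)
snapshot_step() {
    local step job_id=$2
    step=$(printf '%02d' "$1")    # zero-pad so the files sort in order
    run runWorker.pl -url "$PIPELINE_URL" -job_id "$job_id"
    run beekeeper.pl -url "$PIPELINE_URL" -sync
    run visualize_jobs.pl -url "$PIPELINE_URL" -out "long_mult_jobs_${step}.png"
    run generate_graph.pl -url "$PIPELINE_URL" -out "long_mult_analyses_${step}.png"
}
```

For example, `DRY_RUN=1 snapshot_step 1 1` prints the four commands for step 1 without running anything, which is a cheap way to check the file names before touching the database.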


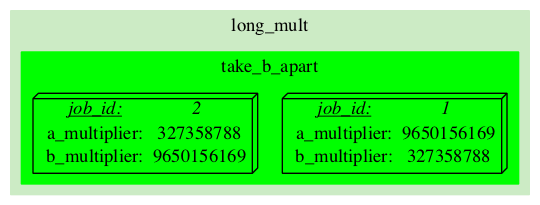

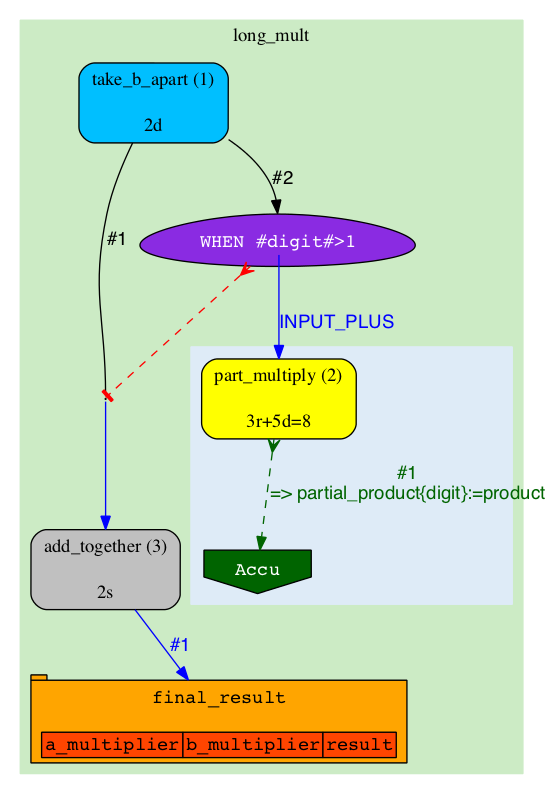

This is our pipeline just after the initialization:

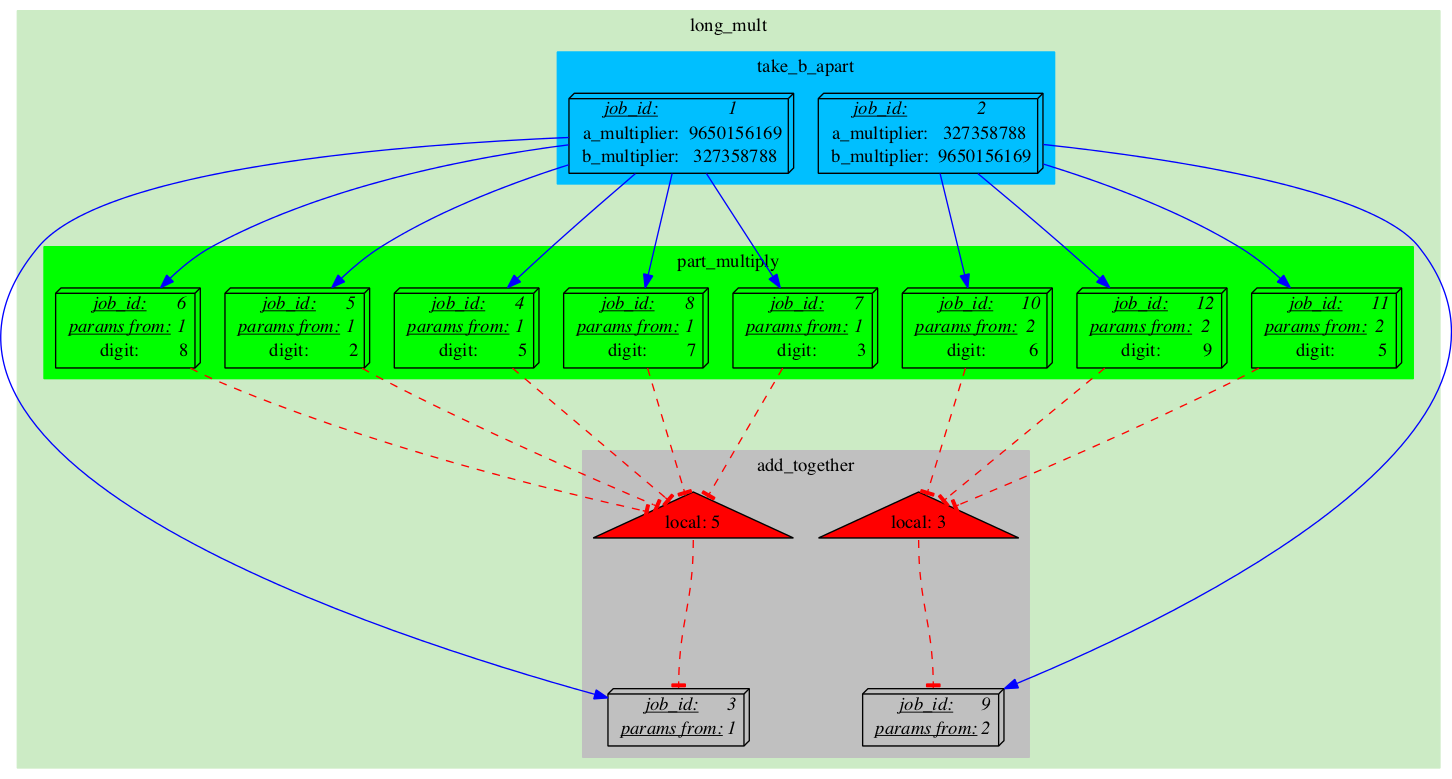

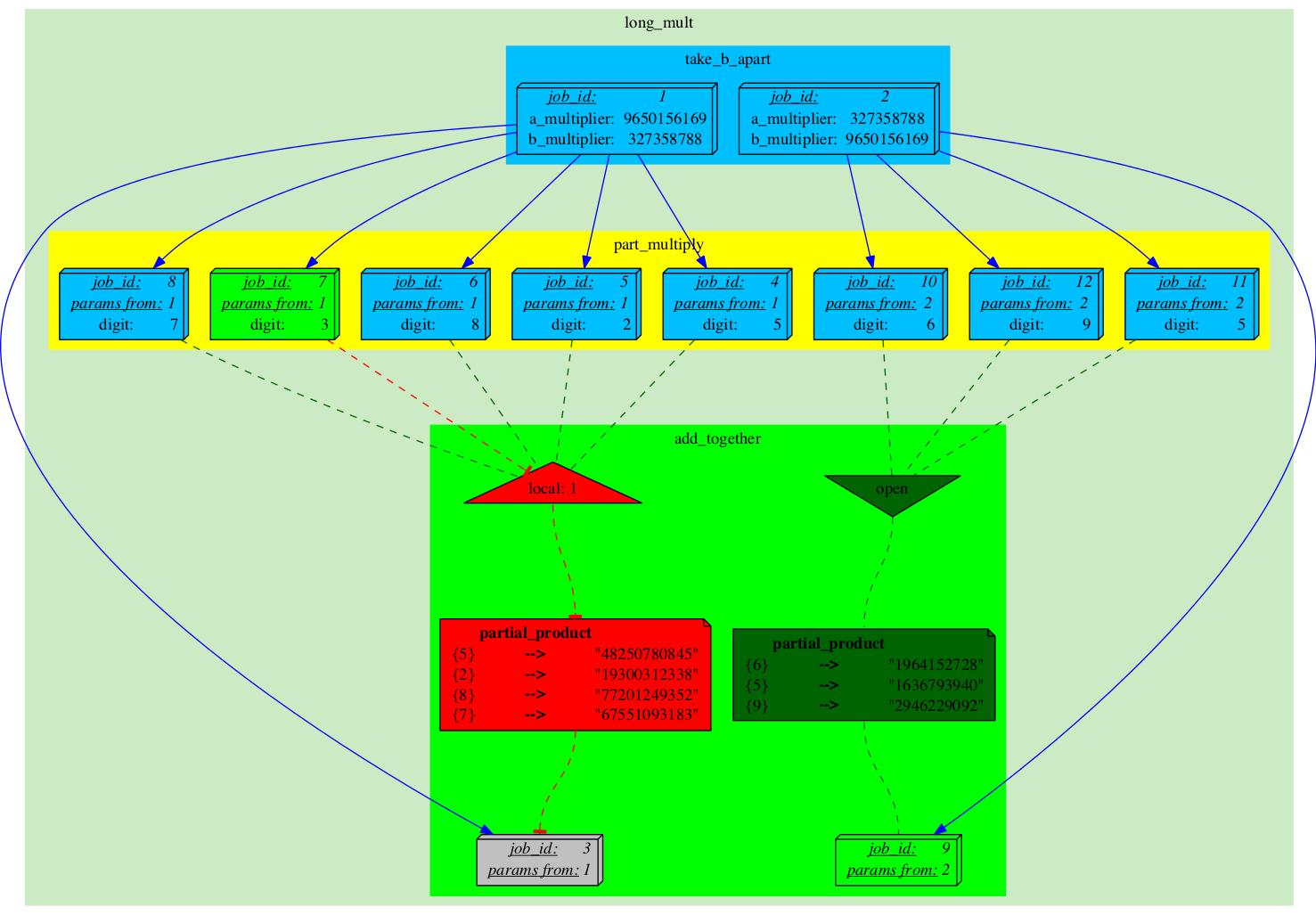

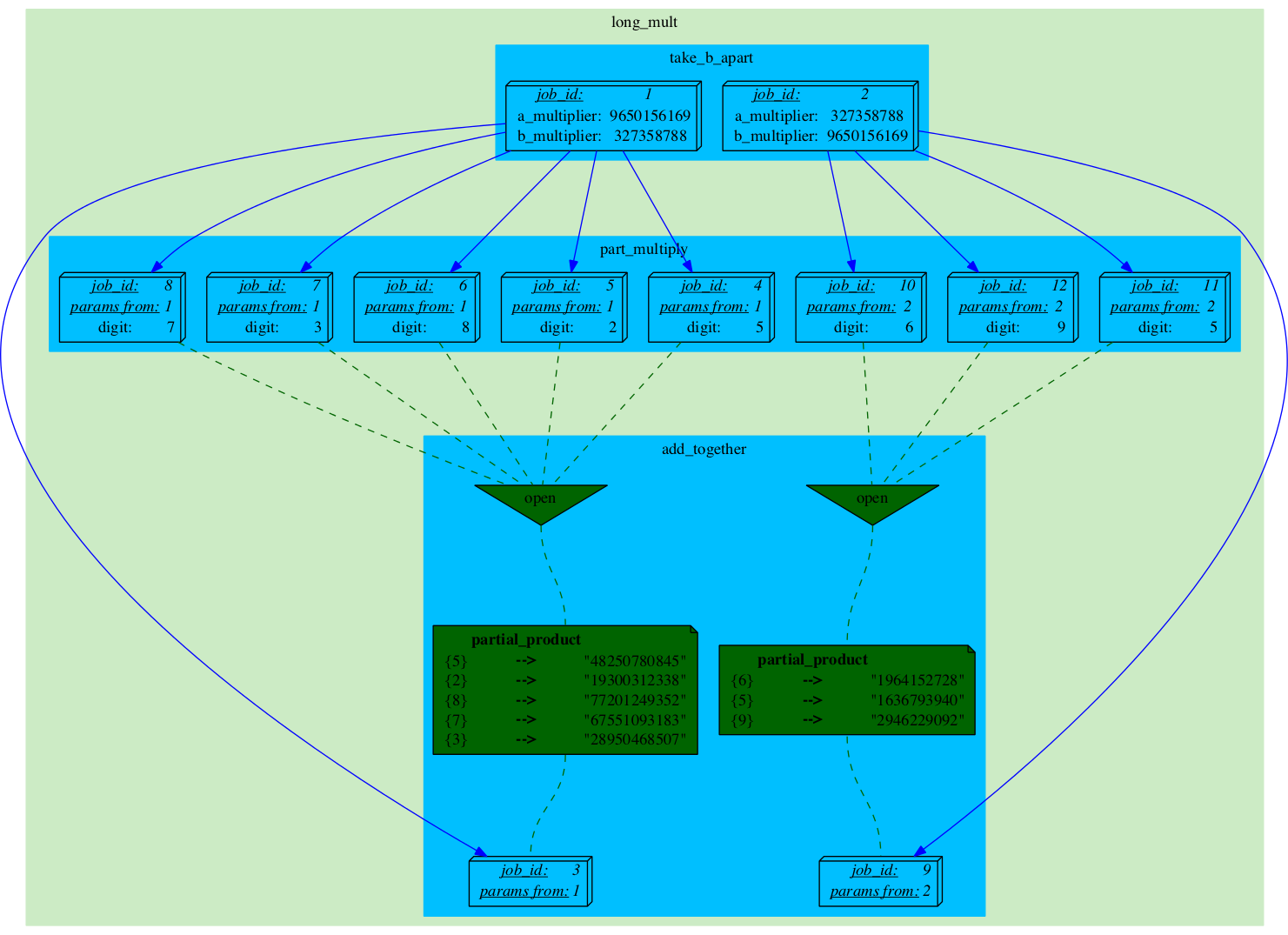

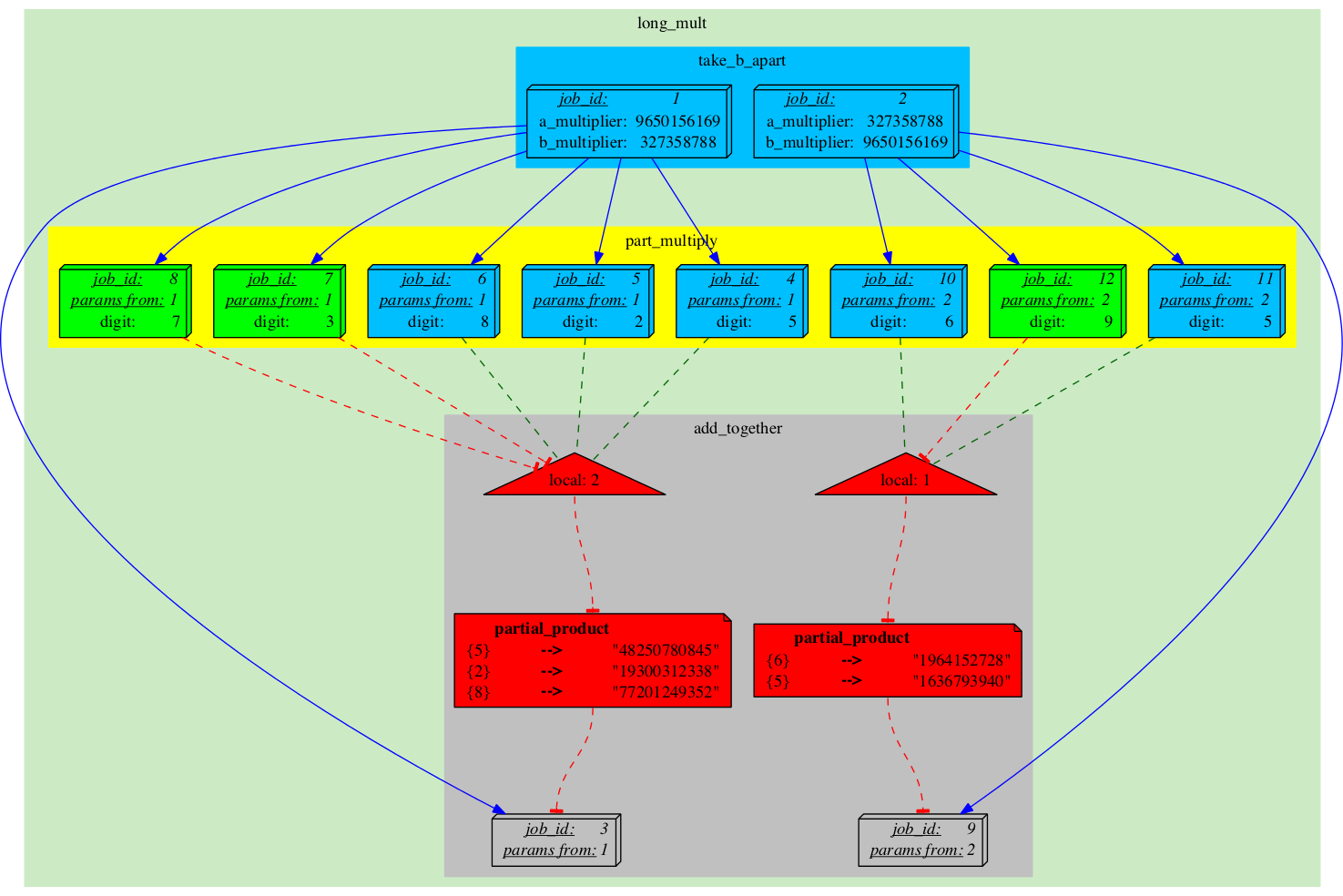

After running the first Job we see a lot of changes on the J-diagram:

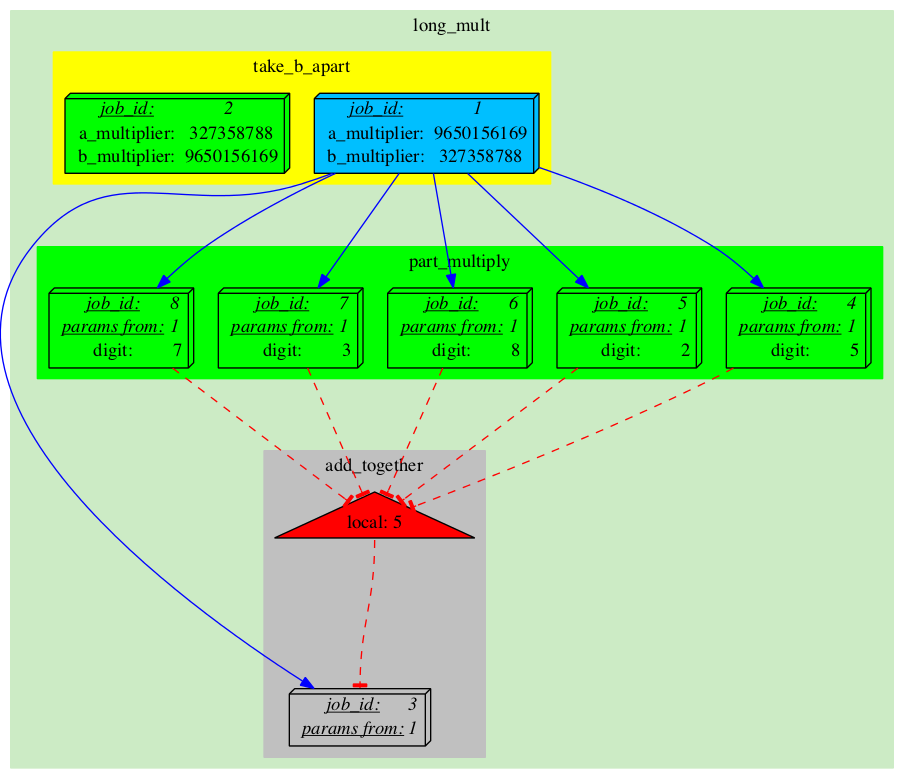

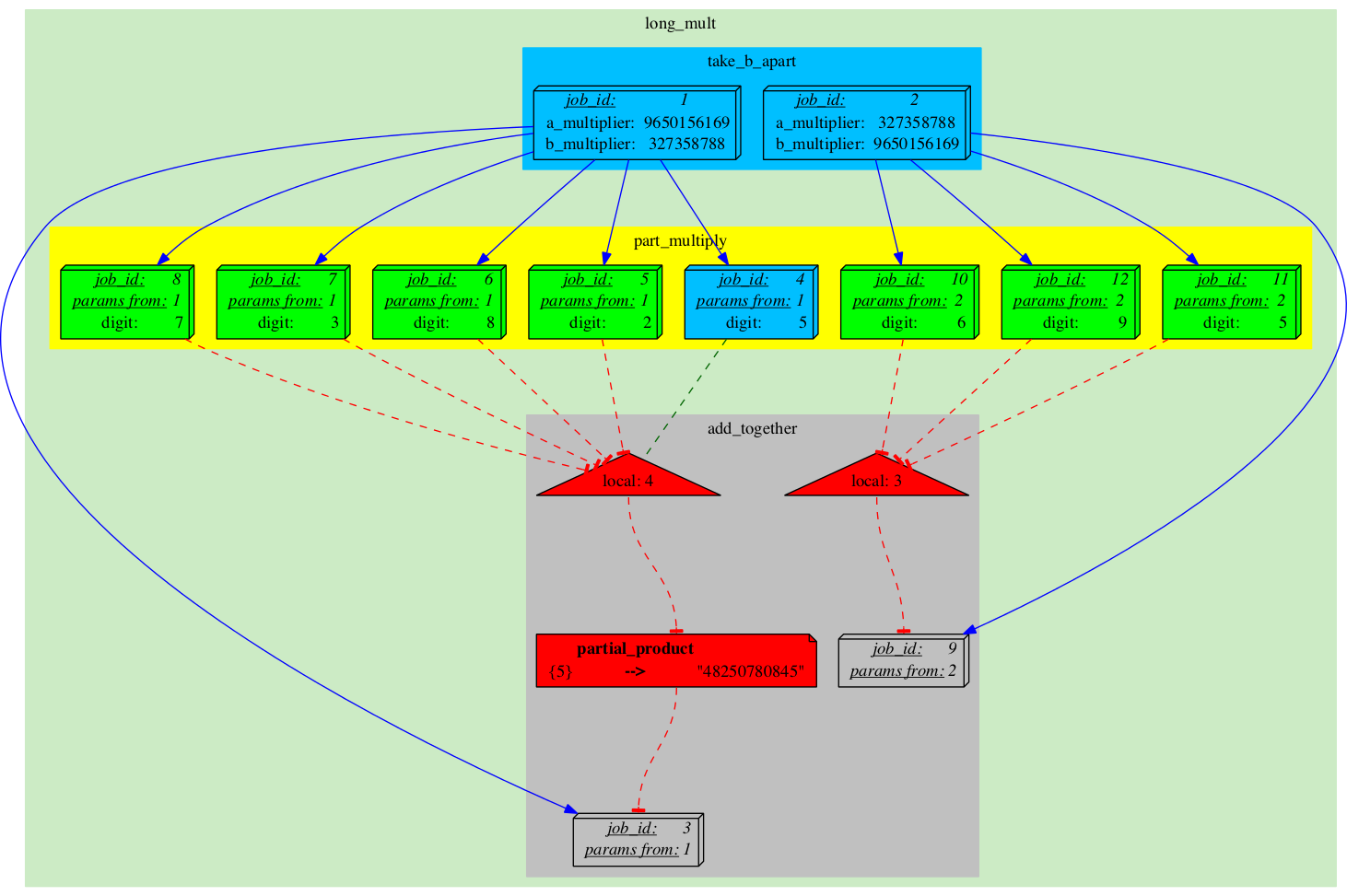

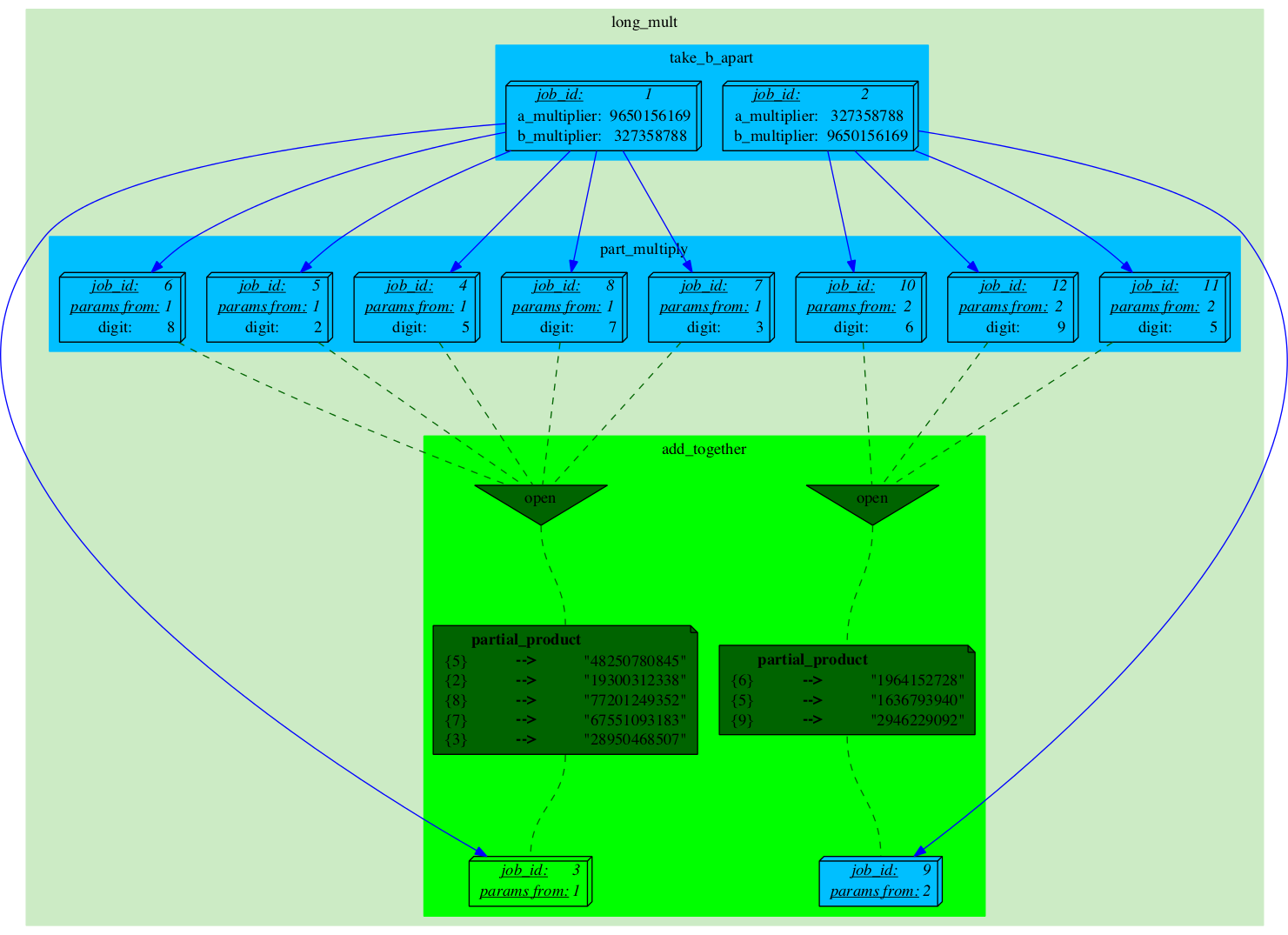

After running the second Job more jobs have been added to Analyses 'part_multiply' and 'add_together'.

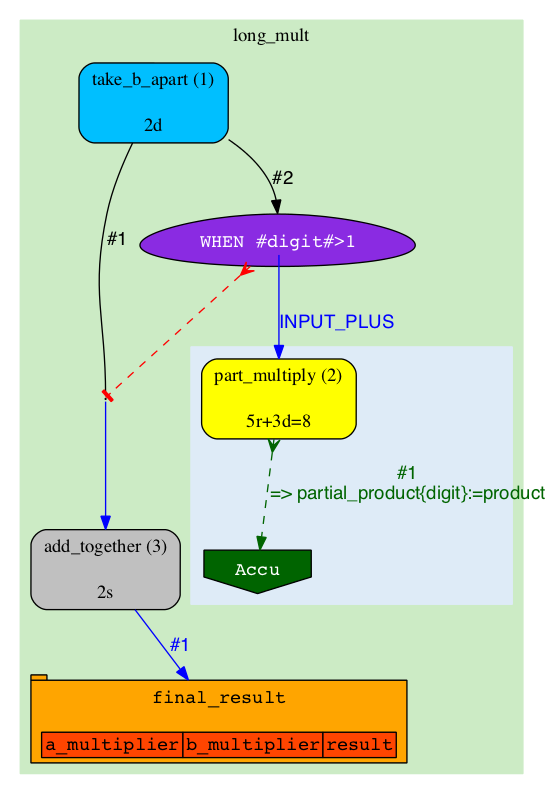

We finally get to run a Job from the second Analysis.

A couple more Jobs get executed, with a similar effect.


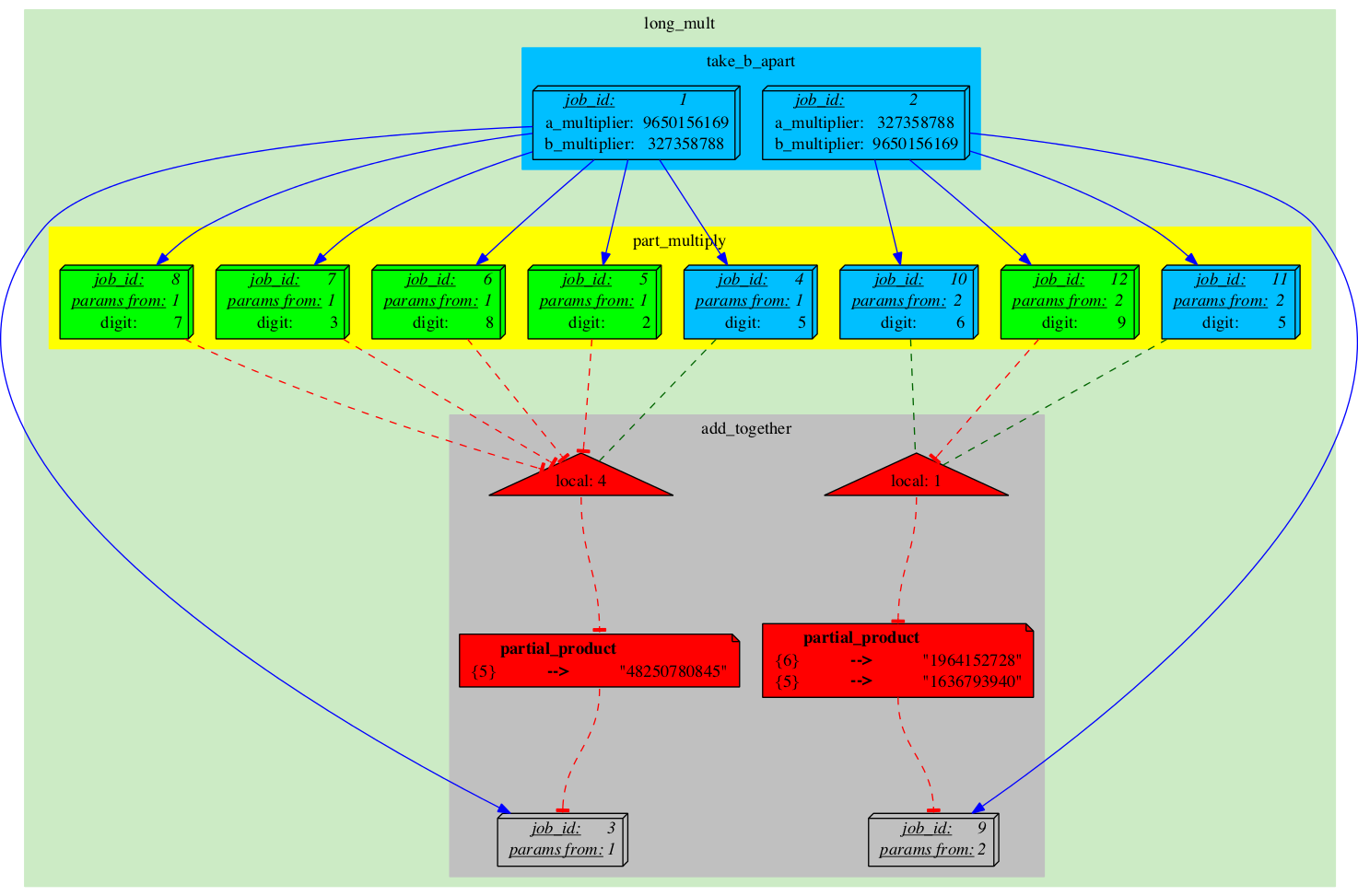

After executing these two jobs:


And another couple more Jobs...


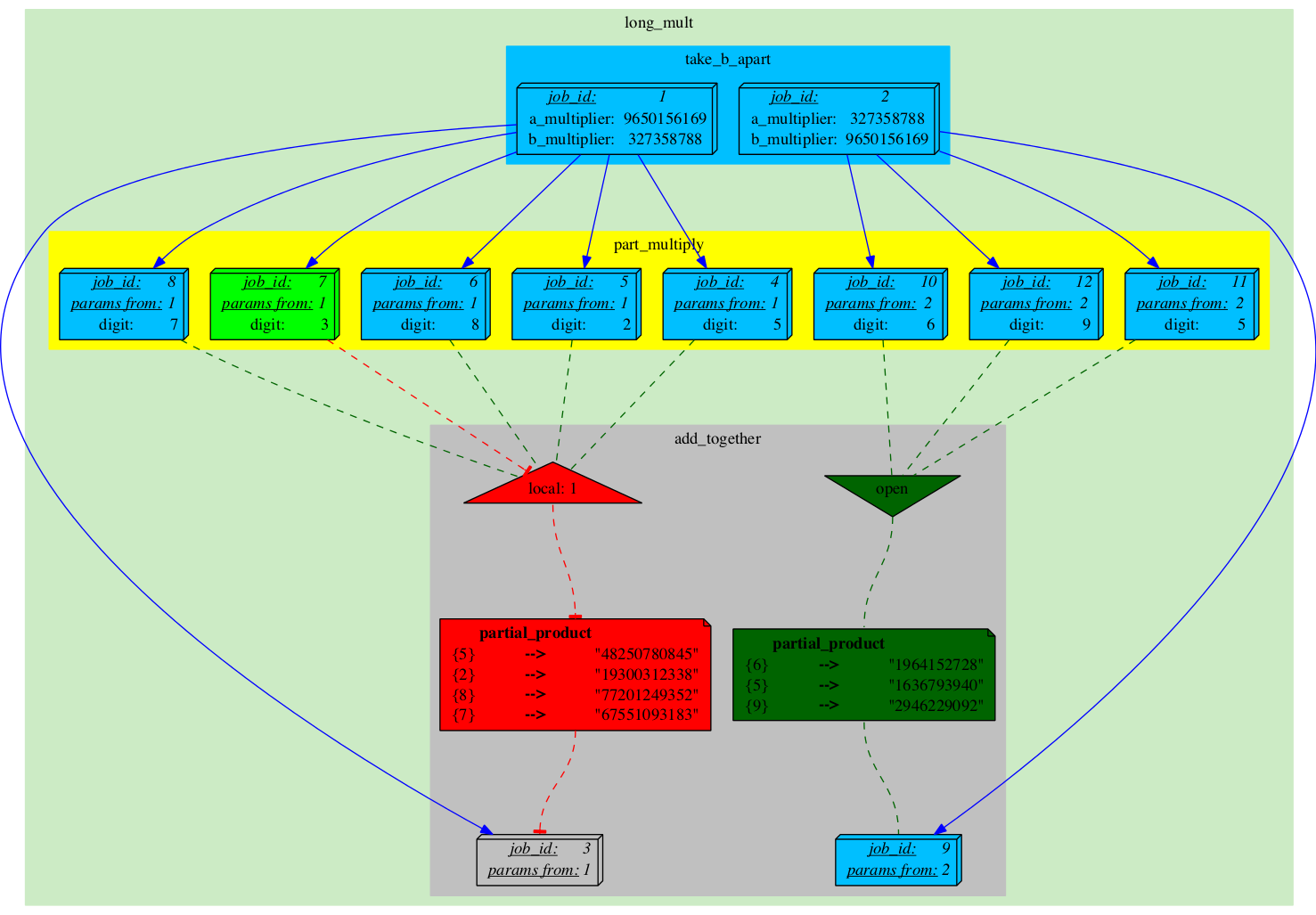

Finally, one of the Semaphores gets completely unblocked, which moves Job_9 into the 'READY' state.
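The unblocking can be pictured as a counter reaching zero. The toy model below is not eHive code and makes no claim about how Hive stores semaphores; the names `semaphore_count`, `job_status` and `release_one`, the starting count of 3 and the `BLOCKED` label are all assumptions chosen for illustration.

```shell
# Hypothetical model, not eHive code:
# a semaphore counts the upstream jobs that have not finished yet.
semaphore_count=3       # assumed: three upstream jobs still outstanding
job_status=BLOCKED      # placeholder label for the waiting downstream job

# Call once each time an upstream job finishes.
release_one() {
    semaphore_count=$(( semaphore_count - 1 ))
    if [ "$semaphore_count" -eq 0 ]; then
        job_status=READY    # fully unblocked: the downstream job may run
    fi
}

release_one
release_one
echo "$job_status"      # still BLOCKED: one upstream job remains
release_one
echo "$job_status"      # READY
```

The point of the sketch is that the downstream job changes state only when the last of its upstream jobs completes, regardless of the order in which they were run.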

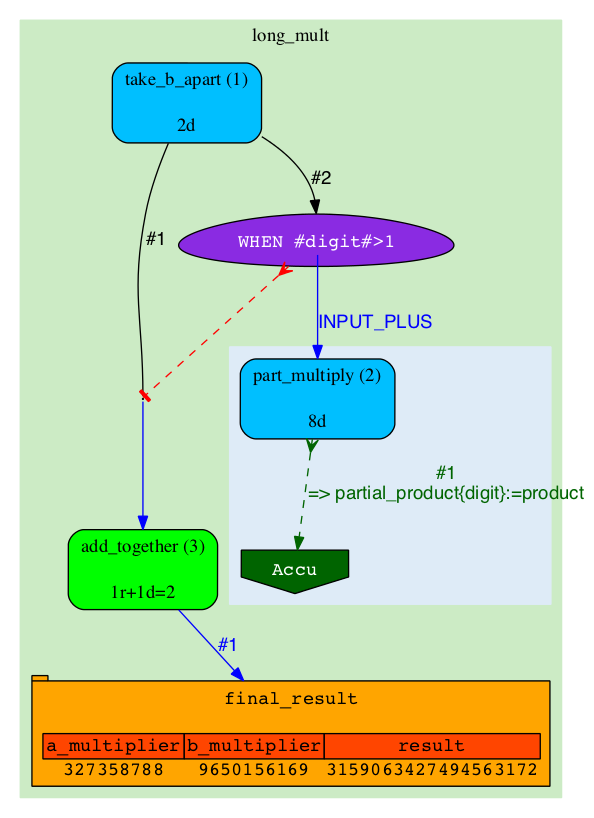

Job_9 gets executed.

The last 'part_multiply' job gets run...
