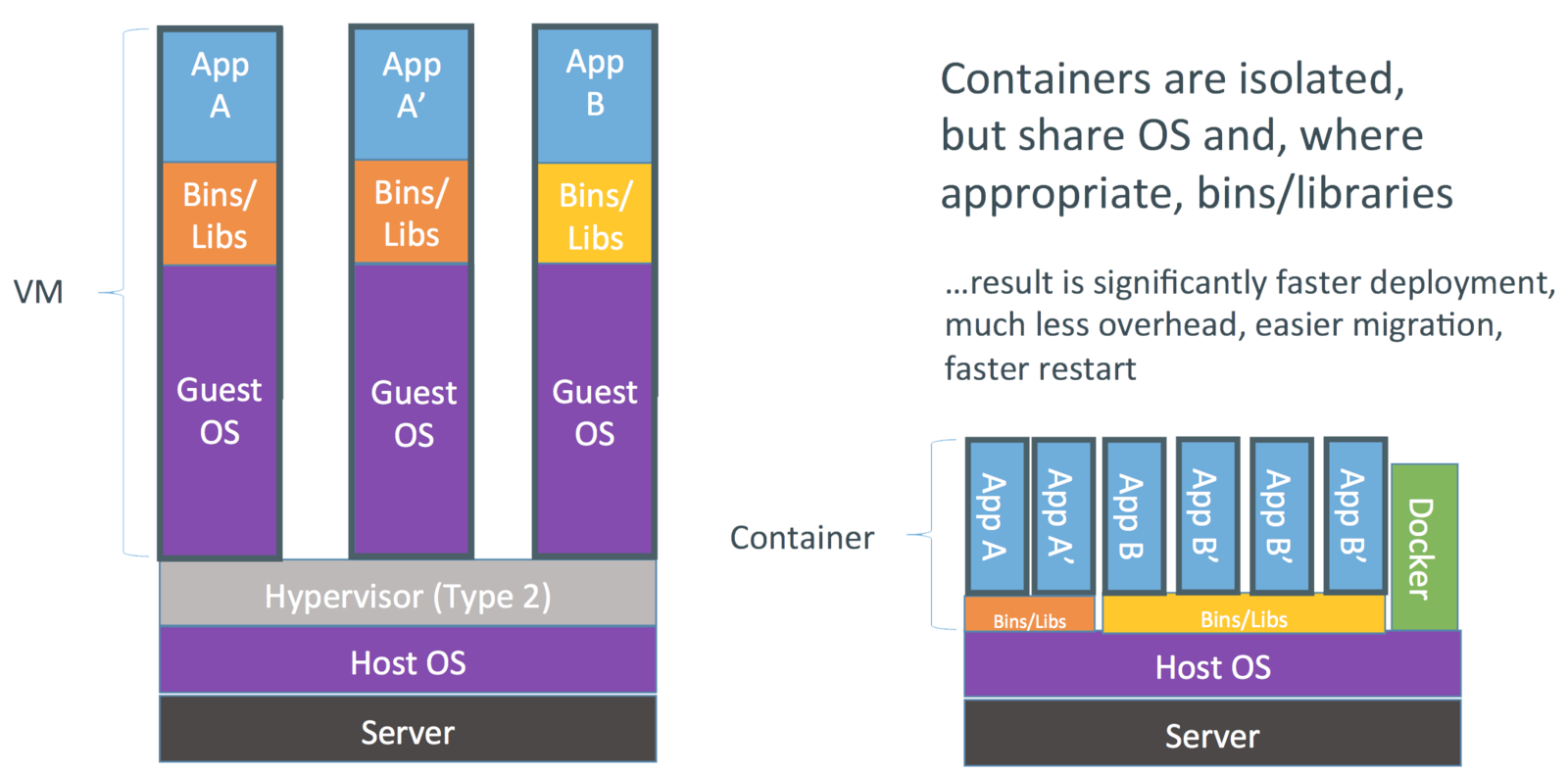

class: center, middle, inverse, title-slide

# R + Docker: Why & How
### Clayton Yochum
### November 9, 2017

---
layout: true
background-color: #fafaef

<div class="my-footer"><img src="mc_logo_rectangle.png" style="height: 30px;"/></div>

---

## What is Docker?

--

- Something people _will not_ shut the hell up about
--
 (myself included)

--

- docker.com:
--
 "Docker is the world's leading software containerization platform"

--

- _containerization_?

--

**My Take**

--

Docker is a tool for _packaging software_ so it:

--

- is easy to run the same way in different places
--
.pull-right[(portable)]

--

- is easy to throw away and start over
--
.pull-right[(disposable)]

--

- runs the same way tomorrow, next month, next year
--
.pull-right[(reproducible)]

---
class: center, middle

## We increasingly need to not just _write_ code, but _run it_ somewhere else

--

## Managing servers is a lot of work, and we want to spend as little time doing it as possible

--

## **Docker lets me be lazy and worry less about the systems running our code**

---

## If only we knew sooner...

--

Issues we could have solved with Docker:

--

- We have a shiny app on AWS we can no longer run locally

--

- We have another shiny app that takes 1-2 hours to re-deploy when things go wrong

--

- We've delivered code that took our client multiple hours to find and install non-R dependencies for

--

Packrat is ~~great~~okay at managing R package dependencies, but Docker makes it easy to handle _the other stuff_:

--

- system-level dependencies (java, curl, ssl, etc.)

--

- unpackaged R code (analysis, modeling, etc.)

--

- R version

---

## Okay, so what's a container?

--

- It's where your code goes!
--

- _Not_ a new concept; the underlying ideas have been around in Unix and Linux for decades
--
 (but Docker started in 2013)

--

- _Containerizing_ some code means packing it up into a single object that has both your code and everything your code needs to run

--

- We call those objects container _images_

--

- You need special software (Docker) to run images, but an image should run exactly the same anywhere you run it

--

- A _container_ is an instance of an image running on some computer

---

## Did you just describe a virtual machine?

--

Yeah, pretty much! But:

--

- Containers are more _lightweight_ and _portable_ than VMs

--

- Containers are easier to deploy, and you can fit more on one system

--

- It's easier to tell a container what you need than to tell a VM

--

- Docker : containers :: Vagrant : virtual machines

--

- VMs emulate a computer _down to the hardware_, but containers make more assumptions and share common pieces (such as the host's kernel) between them

---

_source: Docker, Inc._

---

## Why so damn popular?

--

.pull-left[
In Software Development:

- ensure consistency between dev/staging/production environments
- makes "dev-ops" easier
- many small pieces (microservices) are easier to evolve than one big piece (monolith)
]

--

.pull-right[
In Data Science:

- ensure the model runs when it's not on your computer
- would rather build models than babysit servers
- single container can have many different pieces like R, Python, Jupyter, PyTorch, TensorFlow, etc.
]

---

## How do we use it?

--

.pull-left[
You'll Need:

- docker installed (possibly in a VM)
- access to the terminal
- network/internet access
]

--

.pull-right[
Steps:

1. write a Dockerfile (what your code needs)
2. build an image from the Dockerfile
3. run that image somewhere
]

--

[The Rocker group](https://github.com/rocker-org) builds & maintains awesome R images on [Docker Hub](https://hub.docker.com), including:

--

- plain R

--

- R + RStudio

--

- R + RStudio + Tidyverse

--

- R + Shiny Server

--

- R + tex and related stuff

--

Using one of these lets us skip right to step 3, running the image.

---

## Running RStudio Server in a container

--

If you have docker set up, it's one command to start running RStudio Server with all the Tidyverse pre-installed:

--

```bash
docker run -d -p 80:8787 rocker/tidyverse
```

--

This will:

--

1. Download an image, layer-by-layer, from Docker Hub to your computer

--

2. Run that image as a container, which includes starting RStudio Server inside the container

--

3. Publish the container's RStudio port (8787) on the local machine's port 80

--

Now open a browser, go to `localhost` (or the IP of your VM), and log in to RStudio Server with user/pass `rstudio`/`rstudio`!

--

Sure, you already have R, RStudio, etc. set up _locally_, but how easy is it to get the same setup on a beefy AWS server?
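--

If port 80 is already taken on the host, only the left-hand side of `-p` needs to change; the right-hand side stays at 8787 because that is the port named above for RStudio inside the container. A minimal sketch, assuming a hypothetical helper `rstudio_run_cmd` (not part of Docker or Rocker) that only _prints_ the command instead of running it:

```bash
#!/usr/bin/env bash
# Sketch only: the function name and its arguments are illustrative,
# not something Docker or the Rocker images provide.

# Print a `docker run` command for a given host port and image.
#   -d  run the container in the background
#   -p  HOST_PORT:CONTAINER_PORT mapping
rstudio_run_cmd() {
  local host_port="${1:-80}"            # port on your machine (default 80)
  local image="${2:-rocker/tidyverse}"  # image to run
  printf 'docker run -d -p %s:8787 %s\n' "$host_port" "$image"
}

# Same image as above, but served on host port 8080
rstudio_run_cmd 8080
```

Run the printed command, then browse to `localhost:8080` instead of `localhost`.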
---

## Other useful docker commands

```bash
# list running containers
docker ps

# stop a container
docker stop <name>

# start a stopped container
docker start <name>

# list running AND stopped containers
docker ps -a

# remove a stopped container
docker rm <name>

# list images currently on system
docker images

# remove an image
docker rmi <image-name> # can't remove unless all related containers are removed
```

---

## Adding our own stuff

For a recent client deliverable, we used that [`rocker/tidyverse`](https://github.com/rocker-org/rocker-versioned/blob/master/tidyverse/3.4.2/Dockerfile) image as a _base_, and added our own code to it by writing our own _Dockerfile_:

--

```Dockerfile
# start from a pinned version of the base image
FROM rocker/tidyverse:3.4.2

# copy code, data, and models into the rstudio user's home
USER rstudio
WORKDIR /home/rstudio
COPY clientpackage ./clientpackage/
COPY holdout-files ./holdout-files/
COPY models ./models/
COPY predict-script.R README.Rmd report/report.Rmd ./

# install the client package and its R dependencies
RUN R -e "devtools::install('clientpackage', dependencies = TRUE)"

# switch back to root
USER root
```

--

Then we set up GitLab's CI tools so every time the code updates, GitLab will build the image for us!

---

## Adding our own stuff

--

Last time we did a bunch of modeling for a certain client, we were using the R package `h2o`, which requires Java to run.

--

I made a [small Dockerfile](https://github.com/MethodsConsultants/tidyverse-h2o/blob/master/Dockerfile) to add that on top of the `rocker/tidyverse` image:

```Dockerfile
FROM rocker/tidyverse:latest

# install Java, point R at it, then install h2o from CRAN
RUN apt-get update -qq \
  && apt-get -y --no-install-recommends install \
    default-jdk \
    default-jre \
  && R CMD javareconf \
  && install2.r --error \
    --repos 'http://cran.rstudio.com' \
    h2o
```

--

Because this is public on our GitHub, I [configured Docker Hub](https://hub.docker.com/r/methodsconsultants/tidyverse-h2o/) to automatically re-build the image anytime the Dockerfile _or the base image_ changes
--
, _for free_!
--

Then anyone connected to the internet can use this as easily as we used `rocker/tidyverse`:

```bash
docker run -d -p 80:8787 methodsconsultants/tidyverse-h2o
```

---

## Adding stuff outside the Dockerfile

--

When doing research inside a container, we might not want to write our own Dockerfile that copies our code and/or data into the image

--

We instead use the `-v` option of `docker run` to make files on our computer available to the container:

```bash
docker run -d -p 80:8787 -v ~/someproject:/home/rstudio/someproject rocker/tidyverse
```

--

Then if you add/delete/edit those files from within the container, the changes will happen on the host filesystem

--

For this specific image, it's important to mount our local directory under the home directory of user `rstudio` since that's the only user available by default

--

We could use environment variables to make a different user and/or set a password (again, image-specific)

---

## Things we didn't have time for

--

- networking

--

- environment variables

--

- why the layered approach is so awesome

--

- how to combine with CI tools

--

- pulling images from non-Docker Hub locations (e.g. private GitLab registries)

--

- using `docker-machine` to make docker hosts for us

--

- combining Docker with Packrat

--

- _orchestrating_ containers with Kubernetes, Docker Swarm, AWS ECS

---

## Parting thoughts

--

- Getting your Dockerfile right can take more work up-front, but is often worth the time saved down the line
--
, especially when combined with CI tools

--

- If you figure out how to install something _once_, you don't have to sweat it again

--

- Having code that runs _here_ but not _there_ is so last decade

--

- **Docker gives you portability _now_ and reproducibility _later_.**