The future of big data in developmental science – Answering the big questions

Rick O. Gilmore

Support: NSF BCS-1147440, NSF BCS-1238599, NICHD U01-HD-076595

October 28, 2016

Shonkoff, J. P., & Phillips, D. A. (Eds.). (2000). From neurons to neighborhoods: The science of early childhood development. National Academies Press.

"We have empirically assessed the distribution of published effect sizes and estimated power by extracting more than 100,000 statistical records from about 10,000 cognitive neuroscience and psychology papers published during the past 5 years… False report probability is likely to exceed 50% for the whole literature. In light of our findings the recently reported low replication success in psychology is realistic and worse performance may be expected for cognitive neuroscience." (Szucs and Ioannidis 2016)

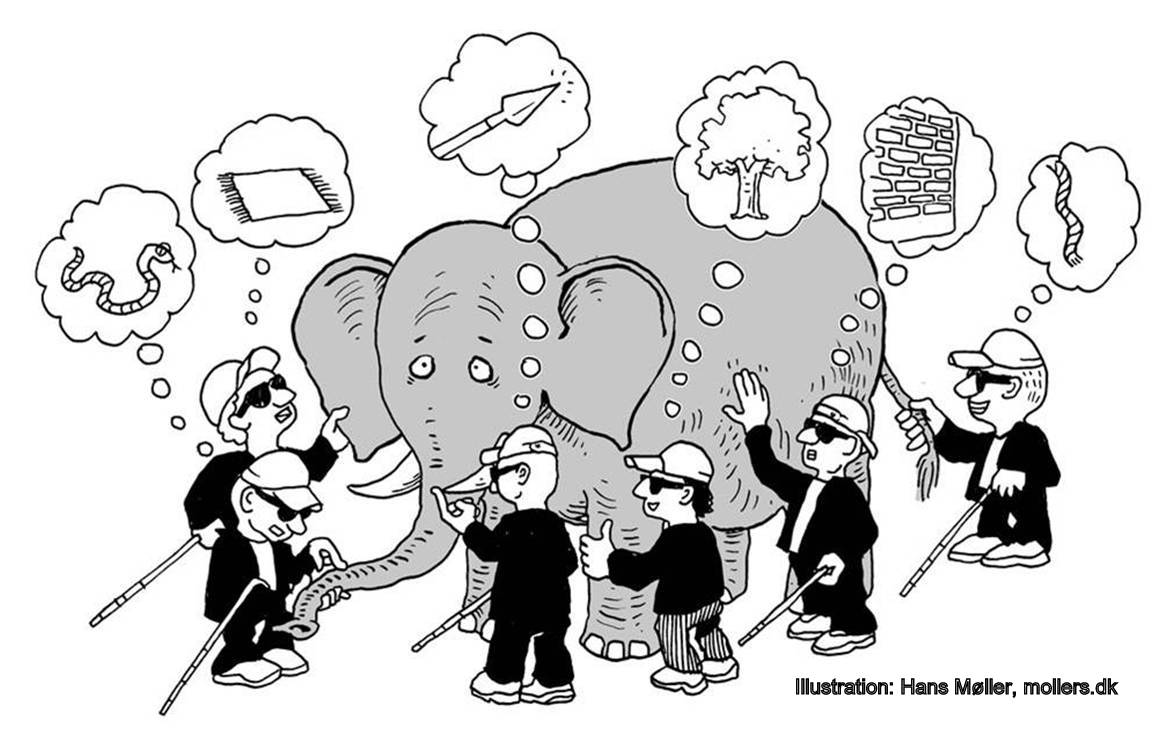

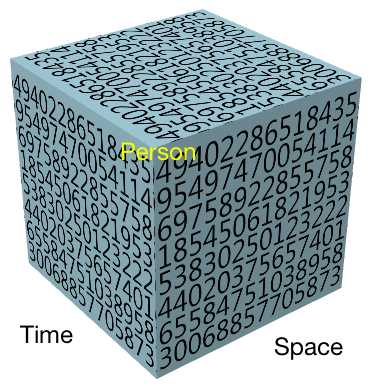

Gilmore, R. O. (2016). From big data to deep insight in developmental science. Wiley Interdisciplinary Reviews: Cognitive Science, 7(2), 112–126. https://doi.org/10.1002/wcs.1379

"You can check out any time you like, but you can never leave."

Tamis-LeMonda, C. (2013). http://doi.org/10.17910/B7CC74.

Open Science Collaboration. 2015. “Estimating the Reproducibility of Psychological Science.” Science 349 (6251): aac4716. doi:10.1126/science.aac4716.

Gilmore, Rick O. 2016. “From Big Data to Deep Insight in Developmental Science.” Wiley Interdisciplinary Reviews: Cognitive Science 7 (2): 112–26. doi:10.1002/wcs.1379.

Goodman, Steven N., Daniele Fanelli, and John P. A. Ioannidis. 2016. “What Does Research Reproducibility Mean?” Science Translational Medicine 8 (341): 341ps12. doi:10.1126/scitranslmed.aaf5027.

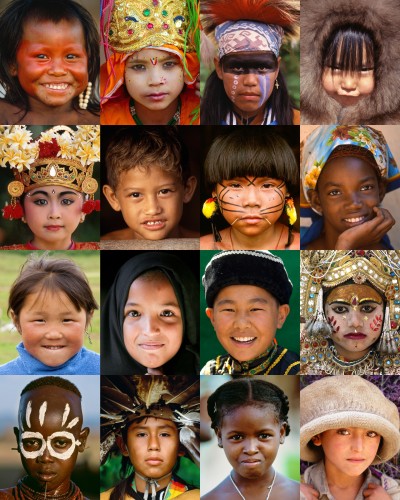

Henrich, Joseph, Steven J. Heine, and Ara Norenzayan. 2010. “The Weirdest People in the World?” The Behavioral and Brain Sciences 33 (2-3): 61–83; discussion 83–135. doi:10.1017/S0140525X0999152X.

Ioannidis, John P. A. 2005. “Why Most Published Research Findings Are False.” PLoS Medicine 2 (8): e124. doi:10.1371/journal.pmed.0020124.

Maxwell, Scott E. 2004. “The Persistence of Underpowered Studies in Psychological Research: Causes, Consequences, and Remedies.” Psychological Methods 9 (2): 147–63. doi:10.1037/1082-989X.9.2.147.

Szucs, Denes, and John P. A. Ioannidis. 2016. “Empirical Assessment of Published Effect Sizes and Power in the Recent Cognitive Neuroscience and Psychology Literature.” bioRxiv, August, 071530. doi:10.1101/071530.